The world of chatbots and Large Language Models (LLMs) has recently undergone a spectacular evolution. ChatGPT, developed by OpenAI, is one of the most notable examples: it reached over 1,000,000 users in just five days. This rise underlines the growing interest in conversational AI and the possibilities that LLMs offer.

LLMs and ChatGPT: A Short Introduction

Large Language Models (LLMs) and chatbots have become indispensable concepts in artificial intelligence and point to the future of human-computer interaction. LLMs are powerful AI models that understand and generate natural language, while chatbots are programs that simulate human conversations and perform tasks based on textual input. ChatGPT, one of the most notable chatbots, gained immense popularity in a short period of time.

LangChain: the Bridge to LLM-Based Applications

LangChain is one of the frameworks that make it possible to leverage the power of LLMs when developing and supporting applications. This open-source library, initiated by Harrison Chase, offers a generic way to address different LLMs and extend them with new data and functionality. Currently available in Python and TypeScript/JavaScript, LangChain is designed to make it easy to connect different LLMs and data environments.

LangChain Core Concepts

To fully understand LangChain, we need to explore some core concepts:

- Chains: LangChain is built on the concept of a chain. A chain is simply a generic sequence of modular components. These chains can be put together for specific use cases by selecting the right components.

- LLMChain: The most common type of chain within LangChain is the LLMChain. This consists of a PromptTemplate, a Model (which can be an LLM or a chat model) and an optional OutputParser.

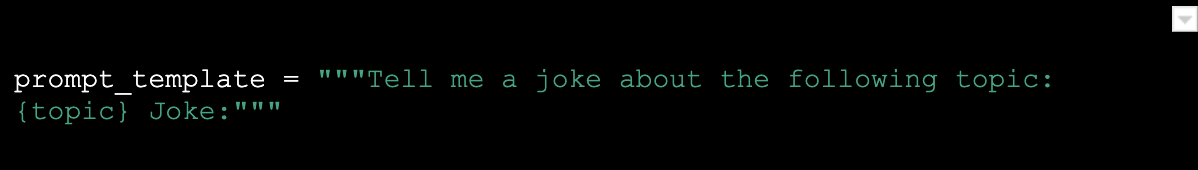

A PromptTemplate is a template used to generate a prompt for the LLM. Here's an example:
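A minimal sketch of the idea in plain Python, not LangChain's own `PromptTemplate` class. The `SimplePromptTemplate` name, the template text and the `topic` variable are assumptions made for illustration:

```python
from dataclasses import dataclass
from string import Formatter


@dataclass(frozen=True)
class SimplePromptTemplate:
    """Hypothetical stand-in for a prompt template: text with named placeholders."""

    template: str

    @property
    def input_variables(self) -> list[str]:
        # Collect the placeholder names that appear between curly braces.
        return [name for _, name, _, _ in Formatter().parse(self.template) if name]

    def format(self, **values: str) -> str:
        # Fail early with a readable message if a placeholder is left unfilled.
        missing = [name for name in self.input_variables if name not in values]
        if missing:
            raise ValueError(f"missing values for: {missing}")
        return self.template.format(**values)


joke_prompt = SimplePromptTemplate("Tell me a short joke about {topic}.")
print(joke_prompt.format(topic="chatbots"))
```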

This template allows the user to fill in a topic, after which the completed prompt is sent as input to the model.

LangChain also offers ready-made PromptTemplates, such as Zero Shot, One Shot and Few Shot prompts.

- Model and OutputParser: A model is the implementation of an LLM itself. LangChain has several implementations for LLMs, including OpenAI, GPT4All, and HuggingFace.

It is also possible to add an OutputParser to process the output of the LLM. For example, a ListOutputParser is available to convert the model's output into a list in the current programming language.
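As a rough illustration of what such a parser does, the sketch below turns a comma-separated model reply into a Python list. The function name `parse_comma_separated_list` and the sample reply are hypothetical and not part of LangChain:

```python
def parse_comma_separated_list(llm_output: str) -> list[str]:
    """Split a raw model reply such as 'red, green, blue' into clean items."""
    items = (part.strip() for part in llm_output.split(","))
    # Drop empty entries caused by trailing commas or doubled separators.
    return [item for item in items if item]


raw_reply = "Python, TypeScript , JavaScript,"
print(parse_comma_separated_list(raw_reply))
```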

Data Connectivity in LangChain

To give the LLMChain access to specific data, such as internal data or customer information, LangChain uses several concepts:

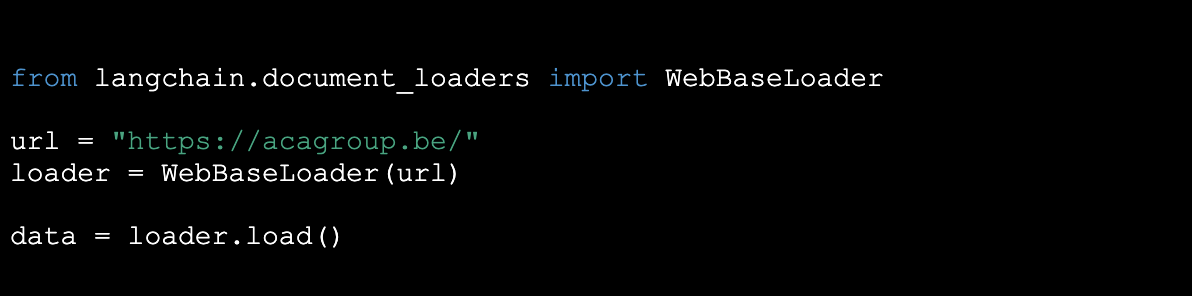

- Document Loaders

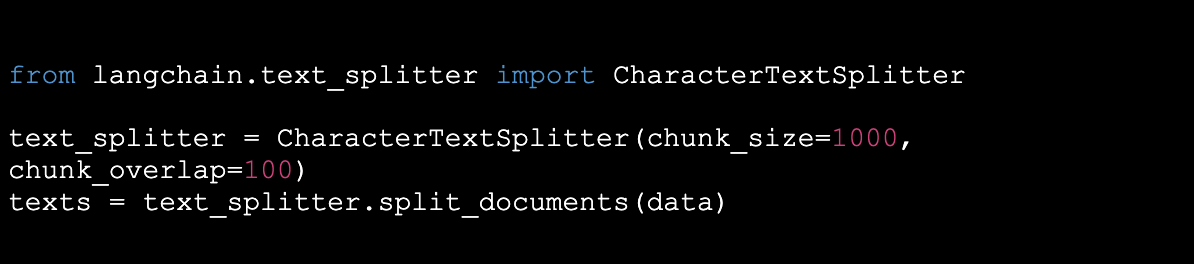

Document Loaders allow LangChain to retrieve data from various sources, such as CSV files and URLs. - Text Splitter

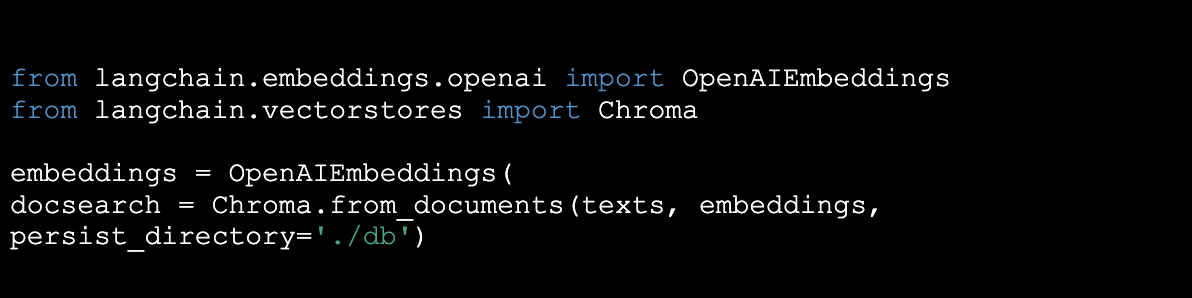

This tool splits documents into smaller pieces to make them easier to process by LLM models, taking into account limitations such as token limits. - Embeddings

LangChain offers several integrations for converting textual data into numerical data, making it easier to compare and process. The popular OpenAI Embeddings is an example of this. - VectorStores

This is where the embedded textual data is stored. These could, for example, be data vector stores, where the vectors represent the embedded textual data. FAISS (from Meta) and ChromaDB are some more popular examples. - Retrievers

Retrievers make the connection between the LLM and the data in VectorStores. They retrieve relevant data and expand the prompt with the necessary context, which makes context-aware questions and tasks possible.

An example of such a context-aware prompt looks like this:
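A sketch of how such a prompt could be assembled, assuming the retriever has already returned a few text fragments. The template wording, the `build_context_prompt` helper and the sample fragments are illustrative assumptions, not LangChain's built-in prompt:

```python
CONTEXT_PROMPT = (
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say you do not know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_context_prompt(question: str, fragments: list[str]) -> str:
    """Insert the retrieved fragments and the user's question into the template."""
    context = "\n---\n".join(fragments)
    return CONTEXT_PROMPT.format(context=context, question=question)


retrieved_fragments = [
    "LangChain is available in Python and TypeScript/JavaScript.",
    "LangChain was initiated by Harrison Chase.",
]
print(build_context_prompt("Who started LangChain?", retrieved_fragments))
```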

Demo Application

To illustrate the power of LangChain, we can create a demo application that follows these steps:

- Retrieve data based on a URL.

- Split the data into manageable blocks.

- Store the data in a vector database.

- Grant an LLM access to the vector database.

- Create a Streamlit application that gives users access to the LLM.

Below we show how to perform these steps in code:

1. Retrieve Data

Fortunately, retrieving data from a website with LangChain does not require any manual work. Here's how we do it:
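To make the step concrete without depending on a particular LangChain version, the sketch below does the same job with the Python standard library only. It is a stand-in for what a document loader does, not LangChain's loader API. The names `html_to_text` and `load_url` are hypothetical, and the returned `page_content`/`metadata` shape is only loosely modelled on a LangChain document:

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collects visible text and skips the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Reduce an HTML document to its visible text, one fragment per line."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


def load_url(url: str) -> dict:
    """Download a page and return its text together with the source URL."""
    with urlopen(url) as response:  # network access happens only here
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return {"page_content": html_to_text(html), "metadata": {"source": url}}


sample_html = (
    "<html><head><style>p{}</style></head><body><h1>Title</h1>"
    "<p>Hello <b>world</b></p><script>var x = 1;</script></body></html>"
)
print(html_to_text(sample_html))
```

Calling `load_url` with a real address would fetch the page. The sample above parses an inline string so the sketch runs offline.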

2. Split Data

The retrieved data now contains a collection of pages from the website. These pages hold a lot of information, sometimes too much for the LLM to process, because many LLMs accept only a limited number of tokens. Therefore, we need to split up the documents:
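A minimal sketch of fixed-size splitting with overlap, so that a sentence cut at a boundary still appears whole in one of the neighbouring chunks. It counts characters as a simple proxy for tokens, which is an assumption. The `split_text` name and the default sizes are illustrative and not LangChain's splitter:

```python
def split_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Cut text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    chunks = []
    start = 0
    while start < len(text):
        end = min(start + chunk_size, len(text))
        chunks.append(text[start:end])
        if end == len(text):
            break
        # Step back by `overlap` so the next chunk repeats the tail of this one.
        start = end - overlap
    return chunks


print(split_text("abcdefghij", chunk_size=4, overlap=1))
```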

3. Store Data

Now that the data has been broken down into smaller contextual fragments, we store it in a vector database so that the LLM can access it efficiently. In this example we use Chroma:

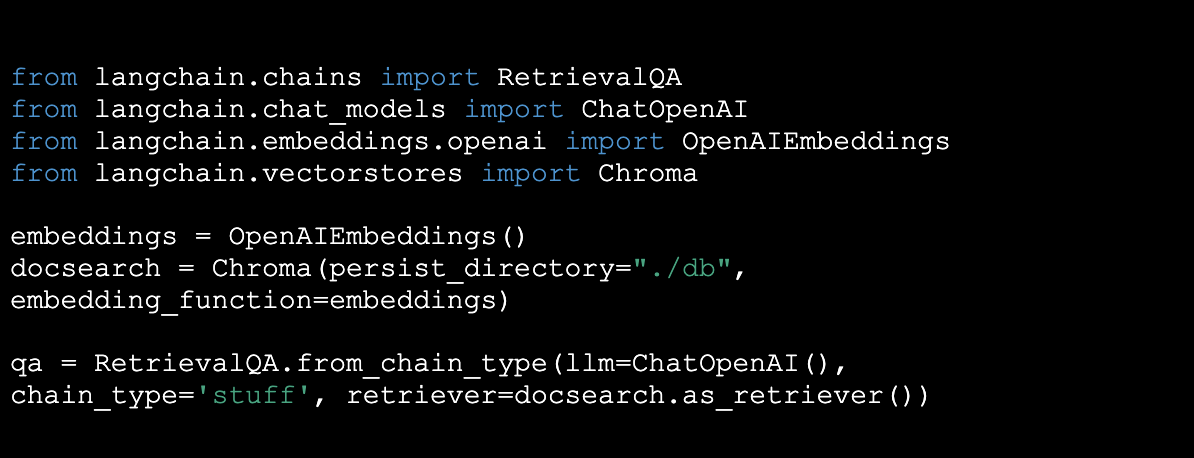
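To show what a vector store does conceptually, here is a toy in-memory version. It is not Chroma's API: the word-count "embedding", the `InMemoryVectorStore` class and its method names are assumptions chosen for illustration. A real setup would use a learned embedding model instead.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a sparse vector of lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Keeps (vector, text) pairs in a list and ranks them by similarity."""

    def __init__(self) -> None:
        self._entries: list[tuple[Counter, str]] = []

    def add_texts(self, texts: list[str]) -> None:
        for text in texts:
            self._entries.append((embed(text), text))

    def similarity_search(self, query: str, k: int = 2) -> list[str]:
        query_vector = embed(query)
        ranked = sorted(
            self._entries,
            key=lambda entry: cosine_similarity(query_vector, entry[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


store = InMemoryVectorStore()
store.add_texts(
    [
        "LangChain connects language models to external data.",
        "Streamlit turns Python scripts into web apps.",
        "Chroma stores embedded text as vectors.",
    ]
)
print(store.similarity_search("Which tool stores vectors?", k=1))
```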

4. Grant Access

Now that the data is saved, we can build a "Chain" in LangChain. A chain is simply a series of LLM executions to achieve the desired outcome. For this example we use the existing RetrievalQA chain that LangChain offers. This chain retrieves relevant contextual fragments from the newly built database, processes them together with the question in an LLM and delivers the desired answer:

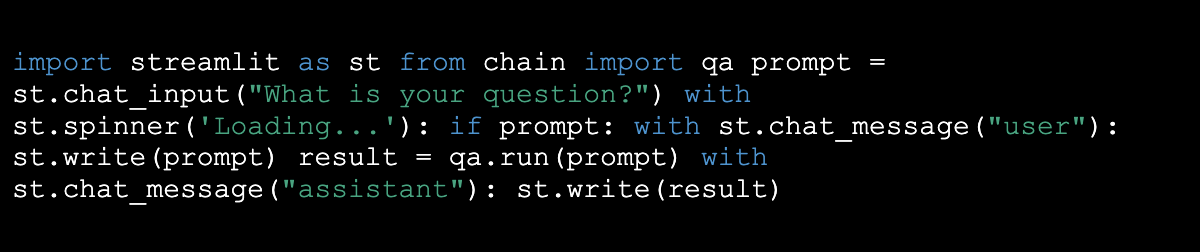
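The flow described above (retrieve fragments, combine them with the question, call the model) can be sketched as a small function pipeline. This is not the RetrievalQA implementation. `make_retrieval_qa`, `fake_retrieve` and `echo_llm` are hypothetical names, and the two stubs stand in for a vector database and a real model so the sketch runs without an API key:

```python
from typing import Callable


def make_retrieval_qa(
    retrieve: Callable[[str], list[str]],
    llm: Callable[[str], str],
) -> Callable[[str], str]:
    """Chain three steps: retrieve fragments, build the prompt, call the model."""

    def answer(question: str) -> str:
        fragments = retrieve(question)
        context = "\n".join(f"- {fragment}" for fragment in fragments)
        prompt = (
            "Use the context to answer the question.\n"
            f"Context:\n{context}\n"
            f"Question: {question}"
        )
        return llm(prompt)

    return answer


def fake_retrieve(question: str) -> list[str]:
    # Stand-in for a similarity search against the vector database.
    return ["LangChain is available in Python and TypeScript/JavaScript."]


def echo_llm(prompt: str) -> str:
    # A real model would generate an answer; this stub only reports the prompt size.
    return f"(stub answer based on a prompt of {len(prompt.splitlines())} lines)"


qa = make_retrieval_qa(fake_retrieve, echo_llm)
print(qa("Which languages does LangChain support?"))
```

Passing the retriever and the model in as plain callables keeps the chain independent of any specific database or LLM provider, which mirrors the modular idea behind chains.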

5. Create Streamlit Application

Now that we've given the LLM access to the data, we need to provide a way for the user to consult the LLM. To do this efficiently, we use Streamlit:
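A minimal sketch of such a front end, assuming Streamlit is installed. The `render_app` function and its `ask` parameter are hypothetical names. `ask` can be any function that maps a question to an answer, for example a question-answering chain:

```python
from typing import Callable


def render_app(ask: Callable[[str], str]) -> None:
    """Draw a one-question UI that shows the answer returned by `ask`."""
    # Imported here so the module can be loaded without Streamlit installed.
    import streamlit as st

    st.title("Ask the website")
    question = st.text_input("Your question")
    if question:
        # Streamlit reruns the script on every input change, so this line
        # runs again each time the user submits a new question.
        st.write(ask(question))
```

Saved as `app.py` with a final call such as `render_app(my_chain)`, where `my_chain` is a placeholder for your own function, the script would be started with `streamlit run app.py`.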

Agents and Tools

In addition to the standard chains, LangChain also offers Agents for more advanced applications. Agents have access to tools that each perform a specific function, ranging from a "Google Search" tool to Wolfram Alpha, a tool for solving complex mathematical problems. An Agent decides which tool to use to answer a question, which enables more advanced reasoning.
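The tool-selection idea can be sketched as follows. In a real Agent the LLM makes the choice. Here a simple rule stands in for that decision, and the tools, `choose_tool` and `run_agent` are all hypothetical placeholders rather than LangChain APIs:

```python
from typing import Callable

Tool = Callable[[str], str]


def calculator(expression: str) -> str:
    """Toy math tool: adds the whitespace-separated numbers in the input."""
    return str(sum(float(token) for token in expression.split()))


def search(query: str) -> str:
    """Placeholder for a web search tool; returns a canned string."""
    return f"(search results for '{query}')"


TOOLS: dict[str, Tool] = {"calculator": calculator, "search": search}


def choose_tool(question: str) -> str:
    """Stand-in for the LLM's decision: purely numeric input goes to the calculator."""
    tokens = question.split()
    is_numeric = bool(tokens) and all(
        token.replace(".", "", 1).isdigit() for token in tokens
    )
    return "calculator" if is_numeric else "search"


def run_agent(question: str) -> str:
    """Pick a tool, run it on the question and label the result with the tool name."""
    tool_name = choose_tool(question)
    return f"[{tool_name}] {TOOLS[tool_name](question)}"


print(run_agent("2 3.5 4"))
print(run_agent("latest LangChain release"))
```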

Alternatives to LangChain

Although LangChain is a powerful framework for building LLM-driven applications, there are alternatives. A popular one is LlamaIndex (formerly known as GPT Index), which focuses on connecting LLMs with external data. LangChain, on the other hand, offers a more complete framework for building applications with LLMs, including tools and plugins.

Conclusion

LangChain is an exciting framework that opens the doors to a new world of conversational AI and application development with Large Language Models. With the ability to connect LLMs to various data sources and the flexibility to build complex applications, LangChain promises to become an essential tool for developers and businesses looking to take advantage of the power of LLMs. The future of conversational AI is looking bright, and LangChain plays a crucial role in this evolution.

What others have also read

At ACA, Ship-IT Days are no-nonsense innovation days.

Read more

November 30, 2023 marked a highly anticipated day for numerous ACA employees. Because on Ship-IT Day, nine teams of ACA team members, whether or not supplemented with customer experts, delved into creating inventive solutions for customer challenges or for ACA Group itself. The hackathon proved to be both inspiring and productive, with at the end a deserved winner! The atmosphere in the ACA office in Hasselt was sizzling right from the early start. Eight out of the nine project teams were stationed here. During the coffee cake breakfast, you immediately felt that it was going to be an extraordinary day. There was a palpable sense of excitement among the project team members , as well as a desire to tackle the complex challenges ahead. 9 innovative projects for internal and external challenges 🚀 After breakfast, the eight project teams swarmed to their working habitat for the day. The ninth team competed in the ACA office in Leuven. We list the teams here: Chatbot course integration in customer portal System integration tests in a CI/CD pipeline Onboarding portal/platform including gamification Automatic dubbing, transcription and summary of conversations publiq film offering data import via ML SMOCS, Low level mock management system Composable data processing architecture Virtual employees Automated invoicing If you want to know more about the scope of the different project teams, read our first blog article Ship-IT Day 2023: all projects at a glance . Sensing the atmosphere in the teams Right before noon, we wondered how the teams had started and how their work was evolving. And so we went to take a quick look... 👀 1. Chatbot course integration in customer portal “After a short kick-off meeting with the customer, we divided the tasks and got to work straight away,” says Bernd Van Velsen. “The atmosphere is great and at the end of the day, we hope to present a result that will inspire the customer . 
In the best case, we will soon be able to use AI tools in a real customer project with the aim of making more optimal use of the customer's many data.” “The Ship-IT Day is an annual tradition that I like to participate in,” says Bernd. “Not only because it is great to collaborate with colleagues from other departments, but also because it is super educational.” 2. System integration tests in a CI/CD pipeline “We want to demonstrate that we can perform click tests in the frontend in an existing environment and verify whether everything works together properly,” says Stef Noten. “We can currently run the necessary tests locally, so we are good on schedule. The next step is to also make this work in our build pipeline. At the end of the day, we hope we will be able to run the tests either manually or scheduled on the latest version of the backend and frontend .” 3. Onboarding portal/platform including gamification The members of this project team all started at ACA fairly recently. And that is exactly what brought them together, because their goal was to develop a platform that makes the onboarding process for new employees more efficient and fun . Dieter Vennekens shared his enthusiasm with us, stating, "We kicked off with a brainstorming session to define the platform's requirements and goals. Subsequently, we reviewed these with the key users to ensure the final product aligns with their expectations. Our aim is to establish the basic structure before lunch, allowing us to focus on development and styling intensively in the afternoon. By the day's end, our objective is to unveil a functional prototype. This project serves as an opportunity to showcase the capabilities of Low-Code .” 4. Automatic dubbing, transcription and summary of conversations Upon entering their meeting room, we found the project team engrossed in their work, and Katrien Gistelinck provided a concise explanation for their business. "Our project is essentially divided into two aspects. 
Firstly, we aim to develop an automatic transcription and summary of a conversation . Concurrently, we are working on the live dubbing of a conversation, although we're uncertain about the feasibility of the latter within the day. It might be a tad ambitious, but we are determined to give it a try." She continued, "This morning, our focus was on defining the user flow and selecting the tools we'll utilize. Currently, multiple tasks are progressing simultaneously, addressing both the UI and backend components." 5. Publiq film offering data import via ML Comprising six publiq employees and three from ACA, this team engaged in an introductory round followed by a discussion of the project approach at the whiteboard. They then allocated tasks among themselves. Peter Jans mentioned, "Everyone is diligently working on their assigned tasks, and we maintain continuous communication. The atmosphere is positive, and we even took a group photo! Collaborating with the customer on a solution to a specific challenge for an entire day is energizing. " "At the close of the day, our objective is to present a functional demo showcasing the AI and ML (Machine Learning) processing of an email attachment, followed by the upload of the data to the UIT database. The outcome should be accessible on uitinvlaanderen.be ." Peter adds optimistically, "We're aiming for the win." That's the spirit, Peter! 6. SMOCS, Low level mock management system Upon our arrival, the SMOCS team was deeply engrossed in their discussions, making us hesitant to interrupt. Eventually, they graciously took the time to address our questions, and the atmosphere was undoubtedly positive. "We initiated the process with a brief brainstorming session at the whiteboard. After establishing our priorities, we allocated tasks accordingly. Currently, we are on track with our schedule: the design phase is largely completed, and substantial progress has been made with the API. 
We conduct a status check every hour, making adjustments as needed," they shared. "By the end of the day, our aim is to showcase an initial version of SMOCS , complete with a dashboard offering a comprehensive overview of the sent requests along with associated responses that we can adjust. Additionally, we have high hopes that the customized response will also show up in the end-user application." 7. Composable data processing architecture This project team aims to establish a basic architecture applicable to similar projects often centered around data collection and processing. Currently, customers typically start projects from scratch, while many building blocks could be reused via platform engineering and composable data. “Although time flies very quickly, we have already collected a lot of good ideas,” says Christopher Scheerlinck. “What do we want to present later? A very complex scheme that no one understands (laughs). No, we aspire to showcase our concepts for realizing a reusable architecture , which we can later pitch to the customer. Given that we can't provide a demo akin to other teams, we've already come to terms with the likelihood of securing second place!" 8. Virtual employees This team may have been the smallest of them all, but a lot of work had already been done just before noon. “This morning we first had a short meeting with the customer to discuss their expectations,” Remco Goyvaerts explains. “We then identified the priority tasks and both of us quickly got to work. The goal is to develop a virtual colleague who can be fed with new information based on AI and ML . This virtual colleague can help new employees find certain information without having to disturb other employees. I am sure that we will be able to show something beautiful, so at the moment the stress is well under control.” Chatbot technology is becoming more and more popular. 
Remco sees this Ship-IT project as the ideal opportunity to learn more about applications with long-term memory. “The Ship-It Day is a fantastic initiative,” says Remco. “It's wonderful to have the opportunity to break away from the routine work structure and explore innovative ideas.” 9. Automated invoicing The client involved in this project handles 50,000 invoices annually in various languages. The objective is to extract accurate information from these invoices, translate it into the appropriate language, and convert it into a format easily manageable for the customer . “Although we started quite late, we have already made great progress,” notes Bram Meerten. "We can already send the invoice to Azure, which extracts the necessary data reasonably well. Subsequently, we transmit that data to ChatGPT, yielding great results. Our focus now is on visualizing it in a frontend. The next phase involves implementing additional checks and solutions for line information that isn't processed correctly." Bram expresses enthusiasm for the Ship-IT Day concept, stating, "It's fun to start from scratch in the morning and present a functional solution at the end of the day. While it may not be finished to perfection, it will certainly be a nice prototype." And the winner is …. 🏆 At 5 p.m., the moment had arrived... Each team had the opportunity to showcase their accomplishments in a 5-minute pitch, followed by a voting session where everyone present could choose their favorite. All teams successfully presented a functional prototype addressing their customer's challenges. While the SMOCS team may not have managed to visualize their solution, they introduced additional business ideas with the SMOCintosh and the SMOCS-to-go food concept. However, these ideas fell just short of securing victory. In a thrilling final showdown, the team working on the onboarding platform for ACA came out as the winners! 
Under the name NACA (New at ACA), they presented an impressive prototype of the onboarding platform, where employees gradually build a rocket while progressing through their onboarding journey. Not only was the functionality noteworthy, but the user interface also received high praise. Congratulations to the well-deserving winners! Enjoy your shopping and dinner vouchers. 🤩 See you next year!

Read more

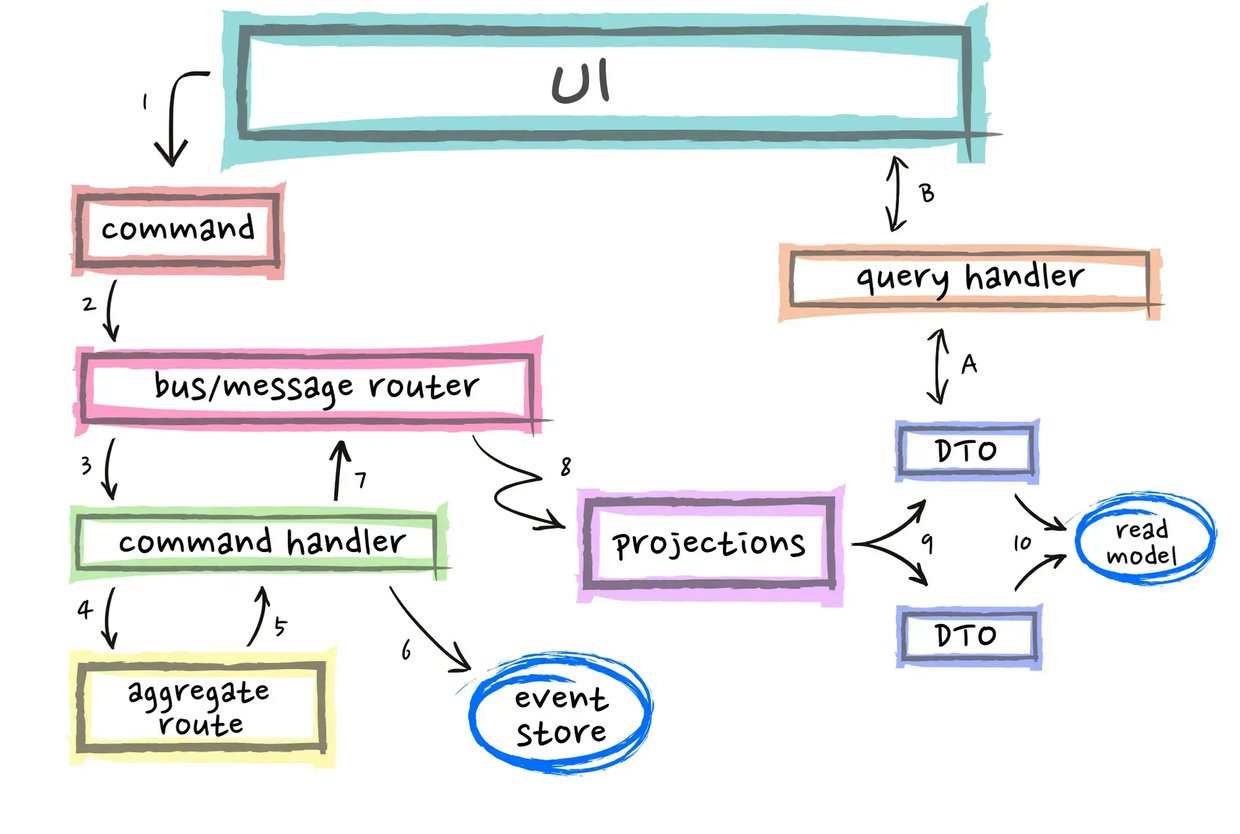

Staying current with the latest trends and best practices is crucial in the rapidly evolving world of software development. Innovative approaches like EventSourcing and CQRS can enable developers to build flexible, scalable, and secure systems. At Domain-Driven Design (DDD) Europe 2022 , Paolo Banfi delivered an enlightening talk on these two techniques. What is EventSourcing? EventSourcing is an innovative approach to data storage that prioritises the historical context of an object. Rather than just capturing the present state of an object, EventSourcing stores all the events that led to that state. Creating a well-designed event model is critical when implementing EventSourcing. The event model defines the events that will be stored and how they will be structured. Careful planning of the event model is crucial because it affects the ease of data analysis. Modifying the event model after implementation can be tough, so it's important to get it right from the beginning. What is CQRS CQRS (Command Query Responsibility Segregation) is a technique that separates read and write operations in a system to improve efficiency and understandability. In a traditional architecture, an application typically interacts with a database using a single interface. However, CQRS separates the read and write operations, each of which is handled by different components. Combining EventSourcing and CQRS One of the advantages of combining EventSourcing and CQRS is that it facilitates change tracking and data auditing. By keeping track of all the events that led to a particular state, it's easier to track changes over time. This can be particularly useful for applications that require auditing or regulation. Moreover, separating read and write operations in this way provides several benefits. Firstly, it optimises the system by reducing contention and improving scalability. Secondly, it simplifies the system by isolating the concerns of each side. 
Finally, it enhances the security of sensitive data by limiting access to the write side of the system. Another significant advantage of implementing CQRS is the elimination of the need to traverse the entire event stream to determine the current state. By separating read and write operations, the read side of the system can maintain dedicated models optimised for querying and retrieving specific data views. As a result, when querying the system for the latest state, there is no longer a requirement to traverse the entire event stream. Instead, the optimised read models can efficiently provide the necessary data, leading to improved performance and reduced latency. When to use EventSourcind and CQRS It's important to note that EventSourcing and CQRS may not be suitable for every project. Implementing EventSourcing and CQRS can require more work upfront compared to traditional approaches. Developers need to invest time in understanding and implementing these approaches effectively. However, for systems that demand high scalability, flexibility or security, EventSourcing and CQRS can provide an excellent solution. Deciding whether to use CQRS or EventSourcing for your application depends on various factors, such as the complexity of your domain model, the scalability requirements, and the need for a comprehensive audit trail of system events. Developers must evaluate the specific needs of their project before deciding whether to use these approaches. CQRS is particularly useful for applications with complex domain models that require different data views for different use cases. By separating the read and write operations into distinct models, you can optimise the read operations for performance and scalability, while still maintaining a single source of truth for the data. Event Sourcing is ideal when you need to maintain a complete and accurate record of all changes to your system over time. 
By capturing every event as it occurs and storing it in an append-only log, you can create an immutable audit trail that can be used for debugging, compliance, and other purposes. Conclusion The combination of EventSourcing and CQRS can provide developers with significant benefits, such as increased flexibility, scalability and security. They offer a fresh approach to software development that can help developers create applications that are more in line with the needs of modern organisations. If you're interested in learning more about EventSourcing and CQRS, there are plenty of excellent resources available online. Conferences and talks like DDD Europe are also excellent opportunities to stay up-to-date on the latest trends and best practices in software development. Make sure not to miss out on these opportunities if you want to stay ahead of the game! The next edition of Domain-Driven Design Europe will take place in Amsterdam from the 5th to the 9th of June 2023. Did you know that ACA Group is one of the proud sponsors of DDD Europe? 
Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!