In the near future, Artificial Intelligence (AI) will bring your company to the next level. Increasing productivity, use of resources, maintainability, staffing efficiency and much more. But before that can happen, you need to collect data and provide enough examples to train your AI algorithms. Whether your company is active in the financial sector or the medical sector, whether you’re focused on warehousing or garbage disposal, every company has one thing in common: data already flows through the organization.

This blog post aims to make you aware of the importance of data collection as a stepping stone to Artificial Intelligence. Only when your data is visible, adequate, and complemented with external data and representative for your demographic, can you profit from positive opportunities that present themselves in today’s world and enables you to make better business decisions.

What is Artificial Intelligence?

Artificial Intelligence (AI) in its simplest form is the imitation of human intelligence by a machine. In other words, it enables programs to make human-like decisions and follow human-like reasoning. A popular subdomain of Artificial Intelligence is Machine Learning. Instead of explicitly programming a set of rules, Machine Learning applications deduct patterns from examples and ‘learn’ how things work.

Unhide your data

Accessible data can be put to good use. Surely somebody knows how many people are working for your company, how much inventory you keep, how much stock you’ve been moving over the last couple of months, and how your factory scores on efficiency and productivity. But what happens with this data once it has been acquired? A nice presentation to the board? Are these numbers stored somewhere in the cloud? Perhaps they are available in a centralized database? Or worst of all, perhaps they are in an Excel file on a private drive collecting dust?

In many companies, only a limited number of people have access to certain assets. Since this implies that data is isolated from the rest of the organization, we call them information silos. Not only does this imply distrust in the organization, it provides a limitation to the team or application processing the data. For the same data, there might be different interpretations between teams, or a correlation between features might remain hidden because the data is distributed over different silos.

There’s a big advantage when data is generally available in a standardized way. Not only can you rely on the trustworthiness of the source, you can guarantee a minimum of quality and completeness. If you build a company culture centered around data and start collecting that data in a uniformed way today, it will fuel your artificial intelligence tomorrow.

Keep more than just YOUR data

Although predicting the future is never certain, you can avoid surprises by incorporating external factors. For instance, when you’re selling electric cars, an increasing oil price might have a positive influence on your sales. A change of government policy on the other hand might have a negative influence. A heat wave might require that your employees get more breaks to prevent exhaustion, which has an influence on productivity. Even annotating data with company initiatives can be beneficial: marketing campaigns (hopefully) result in increased visibility of your organization and solutions, which leads to more sales. That’s why the numbers of your organization should be stored together with external facts and figures that impact the processes which are valuable for your business.

A machine learning algorithm can easily consider these extra parameters to extract a connection between multiple sets of data. It’s able to make a distinction between seasonal effects, the effect of climatic conditions and a general trend of increasing sales.

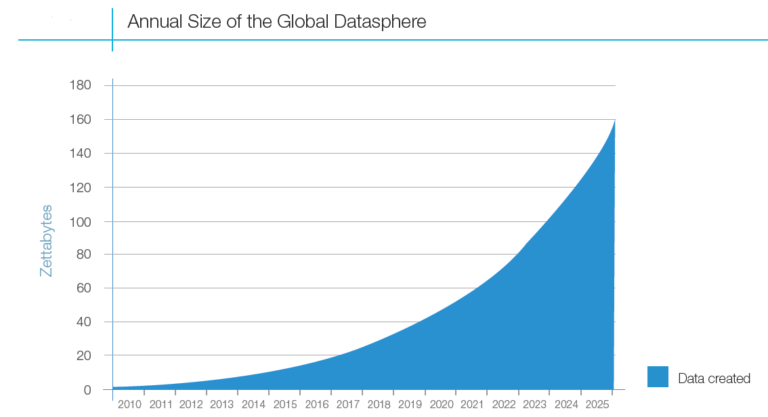

Centralizing decision-making around company data is important, but so is external data: the world around us changes constantly. Be prepared to collect a LOT of data.

Be wary of biased data

There are many examples of where data mining has wrongfully concluded the significance of a certain input feature. Having a complete representation of your inventory or customer base is vital to the impact of data analysis. Besides that, normalization of your input can prevent that your model ever becomes aware of unwanted features. A neural network designed to detect skin cancer was able to identify a correlation between the presence of a ruler next to a tumor when analysing pictures. In an attempt to classify wolves and huskies, scientists deliberately selected images with a specific background to train their algorithm. Thus proving that biased data leads to an inaccurate machine learning model. This is a difficulty that even experienced data scientists face. No wonder experts say they spend more time preparing the data than designing models and training them…

"It makes more sense to worry about the data and be less picky about what algorithm to apply."

– Artificial Intelligence: A Modern Approach (S. Russell and P. Norvig)

Even though collected data is very valuable for your company, you probably didn’t collect it with use for AI applications in mind. It therefore probably contains disruptive features which will influence the learning process. It’s vital to reflect on and asses your data collection from here on out if you want to prepare it for use in AI applications.

Takeaway

More and more companies are changing their process to be data-driven in order to have a competitive advantage. For one to understand how certain aspects influence your productivity, it’s important to collect high quality data. When your sources are reliable and you have a suitable application to present insightful patterns, you can use this to support business decisions.

Today, the hard part is not collecting the data. There are enough tools that will help you do just that. The real challenge lies in the structuring and capturing of the right data. Finding a solution that fits for your specific case isn’t easy, but you can start by setting up a database or data warehouse, thinking about how you’ll structure your data, and then applying it. If you need help or if you have questions, click here to contact us and shoot us a message!

Take action today, because knowing how to realize this takes time and practice. Prepare your company for a data-driven culture and start building knowledge on machine learning to leverage the potential benefit you gain from your data.

What others have also read

At ACA, Ship-IT Days are no-nonsense innovation days.

Read more

Whether we unlock our phones with facial recognition, shout voice commands to our smart devices from across the room or get served a list of movies we might like… machine learning has in many cases changed our lives for the better. However, as with many great technologies, it has its dark side as well. A major one being the massive, often unregulated, collection and processing of personal data. Sometimes it seems that for every positive story, there’s a negative one about our privacy being at risk . It’s clear that we are forced to give privacy the attention it deserves. Today I’d like to talk about how we can use machine learning applications without privacy concerns and worrying that private information might become public . Machine learning with edge devices By placing the intelligence on edge devices on premise, we can ensure that certain information does not leave the sensor that captures it. An edge device is a piece of hardware that is used to process data closely to its source. Instead of sending videos or sound to a centralized processor, they are dealt with on the machine itself. In other words, you avoid transferring all this data to an external application or a cloud-based service. Edge devices are often used to reduce latency. Instead of waiting for the data to travel across a network, you get an immediate result. Another reason to employ an edge device is to reduce the cost of bandwidth. Devices that are using a mobile network might not operate well in rural areas. Self-driving cars, for example, take full advantage of both these reasons. Sending each video capture to a central server would be too time-consuming and the total latency would interfere with the quick reactions we expect from an autonomous vehicle. Even though these are important aspects to consider, the focus of this blog post is privacy. With the General Data Protection Regulation (GDPR) put in effect by the European Parliament in 2018, people have become more aware of how their personal information is used . Companies have to ask consent to store and process this information. Even more, violations of this regulation, for instance by not taking adequate security measures to protect personal data, can result in large fines. This is where edge devices excel. They can immediately process an image or a sound clip without the need for external storage or processing. Since they don’t store the raw data, this information becomes volatile. For instance, an edge device could use camera images to count the number of people in a room. If the camera image is processed on the device itself and only the size of the crowd is forwarded, everybody’s privacy remains guaranteed. Prototyping with Edge TPU Coral, a sub-brand of Google, is a platform that offers software and hardware tools to use machine learning. One of the hardware components they offer is the Coral Dev Board . It has been announced as “ Google’s answer to Raspberry Pi ”. The Coral Dev Board runs a Linux distribution based on Debian and has everything on board to prototype machine learning products. Central to the board is a Tensor Processing Unit (TPU) which has been created to run Tensorflow (Lite) operations in a power-efficient way. You can read about Tensorflow and how it helps enable fast machine learning in one of our previous blog posts . If you look closely at a machine learning process, you can identify two stages. The first stage is training a model from examples so that it can learn certain patterns. The second stage is to apply the model’s capabilities to new data. With the dev board above, the idea is that you train your model on cloud infrastructure. It makes sense, since this step usually requires a lot of computing power. Once all the elements of your model have been learned, they can be downloaded to the device using a dedicated compiler. The result is a little machine that can run a powerful artificial intelligence algorithm while disconnected from the cloud. Keeping data local with Federated Learning The process above might make you wonder about which data is used to train the machine learning model. There are a lot of publicly available datasets you can use for this step. In general these datasets are stored on a central server. To avoid this, you can use a technique called Federated Learning. Instead of having the central server train the entire model, several nodes or edge devices are doing this individually. Each node sends updates on the parameters they have learned, either to a central server (Single Party) or to each other in a peer-to-peer setup (Multi Party). All of these changes are then combined to create one global model. The biggest benefit to this setup is that the recorded (sensitive) data never leaves the local node . This has been used for example in Apple’s QuickType keyboard for predicting emojis , from the usage of a large number of users. Earlier this year, Google released TensorFlow Federated to create applications that learn from decentralized data. Takeaway At ACA we highly value privacy, and so do our customers. Keeping your personal data and sensitive information private is (y)our priority. With techniques like federated learning, we can help you unleash your AI potential without compromising on data security. Curious how exactly that would work in your organization? Send us an email through our contact form and we’ll soon be in touch.

Read more

The world of chatbots and Large Language Models (LLMs) has recently undergone a spectacular evolution. With ChatGPT, developed by OpenAI, being one of the most notable examples, the technology has managed to reach over 1.000.000 users in just five days. This rise underlines the growing interest in conversational AI and the unprecedented possibilities that LLMs offer. LLMs and ChatGPT: A Short Introduction Large Language Models (LLMs) and chatbots are concepts that have become indispensable in the world of artificial intelligence these days. They represent the future of human-computer interaction, where LLMs are powerful AI models that understand and generate natural language, while chatbots are programs that can simulate human conversations and perform tasks based on textual input. ChatGPT, one of the notable chatbots, has gained immense popularity in a short period of time. LangChain: the Bridge to LLM Based Applications LangChain is one of the frameworks that enables to leverage the power of LLMs for developing and supporting applications. This open-source library, initiated by Harrison Chase, offers a generic way to address different LLMs and extend them with new data and functionalities. Currently available in Python and TypeScript/JavaScript, LangChain is designed to easily create connections between different LLMs and data environments. LangChain Core Concepts To fully understand LangChain, we need to explore some core concepts: Chains: LangChain is built on the concept of a chain. A chain is simply a generic sequence of modular components. These chains can be put together for specific use cases by selecting the right components. LLMChain: The most common type of chain within LangChain is the LLMChain. This consists of a PromptTemplate, a Model (which can be an LLM or a chat model) and an optional OutputParser. A PromptTemplate is a template used to generate a prompt for the LLM. Here's an example: This template allows the user to fill in a topic, after which the completed prompt is sent as input to the model. LangChain also offers ready-made PromptTemplates, such as Zero Shot, One Shot and Few Shot prompts. Model and OutputParser: A model is the implementation of an LLM model itself. LangChain has several implementations for LLM models, including OpenAI, GPT4All, and HuggingFace. It is also possible to add an OutputParser to process the output of the LLM model. For example, a ListOutputParser is available to convert the output of the LLM model into a list in the current programming language. Data Connectivity in LangChain To give the LLM Chain access to specific data, such as internal data or customer information, LangChain uses several concepts: Document Loaders Document Loaders allow LangChain to retrieve data from various sources, such as CSV files and URLs. Text Splitter This tool splits documents into smaller pieces to make them easier to process by LLM models, taking into account limitations such as token limits. Embeddings LangChain offers several integrations for converting textual data into numerical data, making it easier to compare and process. The popular OpenAI Embeddings is an example of this. VectorStores This is where the embedded textual data is stored. These could, for example, be data vector stores, where the vectors represent the embedded textual data. FAISS (from Meta) and ChromaDB are some more popular examples. Retrievers Retrievers make the connection between the LLM model and the data in VectorStores. They retrieve relevant data and expand the prompt with the necessary context, allowing context-aware questions and assignments. An example of such a context-aware prompt looks like this: Demo Application To illustrate the power of LangChain, we can create a demo application that follows these steps: Retrieve data based on a URL. Split the data into manageable blocks. Store the data in a vector database. Granting an LLM access to the vector database. Create a Streamlit application that gives users access to the LLM. Below we show how to perform these steps in code: 1. Retrieve Data Fortunately, retrieving data from a website with LangChain does not require any manual work. Here's how we do it: 2. Split Data The resulting data field above now contains a collection of pages from the website. These pages contain a lot of information, sometimes too much for the LLM to work with, as many LLMs work with a limited number of tokens. Therefore, we need to split up the documents: 3. Store Data Now that the data has been broken down into smaller contextual fragments, to provide efficient access to this data to the LLM, we store it in a vector database. In this example we use Chroma: 4. Grant Acces Now that the data is saved, we can build a "Chain" in LangChain. A chain is simply a series of LLM executions to achieve the desired outcome. For this example we use the existing RetrievalQA chain that LangChain offers. This chain retrieves relevant contextual fragments from the newly built database, processes them together with the question in an LLM and delivers the desired answer: 5. Create Streamlit Application Now that we've given the LLM access to the data, we need to provide a way for the user to consult the LLM. To do this efficiently, we use Streamlit: Agents and Tools In addition to the standard chains, LangChain also offers the option to create Agents for more advanced applications. Agents have access to various tools that perform specific functionalities. These tools can be anything from a "Google Search" tool to Wolfram Alpha, a tool for solving complex mathematical problems. This allows Agents to provide more advanced reasoning applications, deciding which tool to use to answer a question. Alternatives for LangChain Although LangChain is a powerful framework for building LLM-driven applications, there are other alternatives available. For example, a popular tool is LlamaIndex (formerly known as GPT Index), which focuses on connecting LLMs with external data. LangChain, on the other hand, offers a more complete framework for building applications with LLMs, including tools and plugins. Conclusion LangChain is an exciting framework that opens the doors to a new world of conversational AI and application development with Large Language Models. With the ability to connect LLMs to various data sources and the flexibility to build complex applications, LangChain promises to become an essential tool for developers and businesses looking to take advantage of the power of LLMs. The future of conversational AI is looking bright, and LangChain plays a crucial role in this evolution.

Read moreWant to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!