Whether we unlock our phones with facial recognition, shout voice commands to our smart devices from across the room or get served a list of movies we might like… machine learning has in many cases changed our lives for the better.

However, as with many great technologies, it has its dark side as well. A major one is the massive, often unregulated collection and processing of personal data. Sometimes it seems that for every positive story, there’s a negative one about our privacy being at risk.

It’s clear that privacy deserves our full attention. Today I’d like to talk about how we can use machine learning applications without worrying that private information might become public.

Machine learning with edge devices

By placing the intelligence on edge devices on premises, we can ensure that certain information never leaves the sensor that captures it. An edge device is a piece of hardware that processes data close to its source. Instead of sending video or sound to a centralized processor, the data is handled on the device itself. In other words, you avoid transferring all this data to an external application or a cloud-based service. Edge devices are often used to reduce latency: instead of waiting for the data to travel across a network, you get an immediate result. Another reason to use an edge device is to save bandwidth, which is costly and not always available; devices that rely on a mobile network, for example, might not work well in rural areas. Self-driving cars benefit on both counts. Sending each video capture to a central server would take too much time, and the resulting latency would interfere with the quick reactions we expect from an autonomous vehicle.
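
To put the latency argument into numbers, the sketch below computes how far a car travels while it waits for a result. The two delays are illustrative assumptions, not measurements of any real device or network; the function name is hypothetical.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled at a constant speed while waiting `delay_ms` milliseconds."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * delay_ms / 1000   # ms -> s


# Hypothetical delays: local inference vs. a round trip to a cloud server
for label, delay_ms in [("on-device", 20), ("cloud round trip", 200)]:
    print(f"{label}: {distance_during_delay(100, delay_ms):.2f} m at 100 km/h")
# prints:
# on-device: 0.56 m at 100 km/h
# cloud round trip: 5.56 m at 100 km/h
```

At 100 km/h, every extra 100 ms of delay means the car covers roughly 2.8 m before it can react.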

Even though these are important aspects to consider, the focus of this blog post is privacy. Since the General Data Protection Regulation (GDPR) became applicable in the European Union in 2018, people have become more aware of how their personal information is used. Companies need a legal basis, such as consent, to store and process this information. Moreover, violations of this regulation, for instance failing to take adequate security measures to protect personal data, can result in large fines.

This is where edge devices excel. They can process an image or a sound clip immediately, without the need for external storage or processing. Because the raw data is never stored, it only exists briefly on the device. For instance, an edge device could use camera images to count the number of people in a room. If the camera image is processed on the device itself and only the size of the crowd is forwarded, everybody’s privacy is preserved.
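
A minimal sketch of the people-counting idea, assuming an on-device detector that returns labelled detections with a confidence score. The `Detection` type, the 0.5 threshold and the payload format are hypothetical; the point is that only an aggregate count is serialized, while the frame and the individual detections stay on the device.

```python
import json
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # class predicted by the (hypothetical) on-device detector
    score: float  # confidence between 0 and 1


def count_people(detections, min_score=0.5):
    """Count confident 'person' detections in a single frame."""
    return sum(1 for d in detections if d.label == "person" and d.score >= min_score)


def build_payload(room_id, detections):
    """Build the only message that leaves the device: an aggregate count.

    Neither the frame nor the individual detections are included."""
    return json.dumps({"room": room_id, "people": count_people(detections)})


# Hypothetical detector output for one camera frame; the frame itself is discarded.
frame_detections = [
    Detection("person", 0.91),
    Detection("person", 0.78),
    Detection("chair", 0.88),
    Detection("person", 0.32),  # below the threshold, so not counted
]
print(build_payload("meeting-room-1", frame_detections))
# prints {"room": "meeting-room-1", "people": 2}
```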

Prototyping with Edge TPU

Coral, a Google sub-brand, is a platform that offers software and hardware tools for building machine learning applications. One of the hardware components it offers is the Coral Dev Board, which has been described as “Google’s answer to Raspberry Pi”.

The Coral Dev Board runs a Linux distribution based on Debian and has everything on board to prototype machine learning products. Central to the board is the Edge TPU, a Tensor Processing Unit (TPU) designed to run TensorFlow Lite models in a power-efficient way. You can read about TensorFlow and how it helps enable fast machine learning in one of our previous blog posts.
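
The Edge TPU executes TensorFlow Lite models whose weights and activations are quantized to 8-bit integers, which is one reason it can be so power-efficient. The plain-Python sketch below shows the general affine (scale and zero-point) quantization scheme behind such models: real values map to integers via q = round(x / scale) + zero_point and back via x ≈ scale · (q − zero_point). It is an illustration only, not the TensorFlow Lite converter; real converters add refinements (for example per-channel scales) that are omitted here, and the weights are hypothetical.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Scale and zero point that map the range of `values` (plus 0.0) onto [qmin, qmax]."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid division by zero for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Real numbers -> 8-bit integers: q = round(x / scale) + zero_point, clamped."""
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in values]


def dequantize(qvalues, scale, zero_point):
    """8-bit integers -> approximate real numbers: x = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]


weights = [-0.8, -0.05, 0.1, 0.45, 1.2]  # hypothetical trained weights
scale, zp = quantization_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print(q)
print([round(r, 3) for r in restored])
```

Each restored value differs from the original by at most half a quantization step (scale / 2), which is the precision traded for fast, low-power integer arithmetic.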

If you look closely at a machine learning process, you can identify two stages. The first stage is training a model on examples so that it learns certain patterns. The second stage is applying the trained model to new data. With the Dev Board, the idea is that you train your model on cloud infrastructure. That makes sense, since training usually requires a lot of computing power. Once the model’s parameters have been learned, the model is converted to TensorFlow Lite, compiled for the Edge TPU with a dedicated compiler and copied to the device. The result is a little machine that can run a powerful artificial intelligence algorithm while disconnected from the cloud.

Keeping data local with Federated Learning

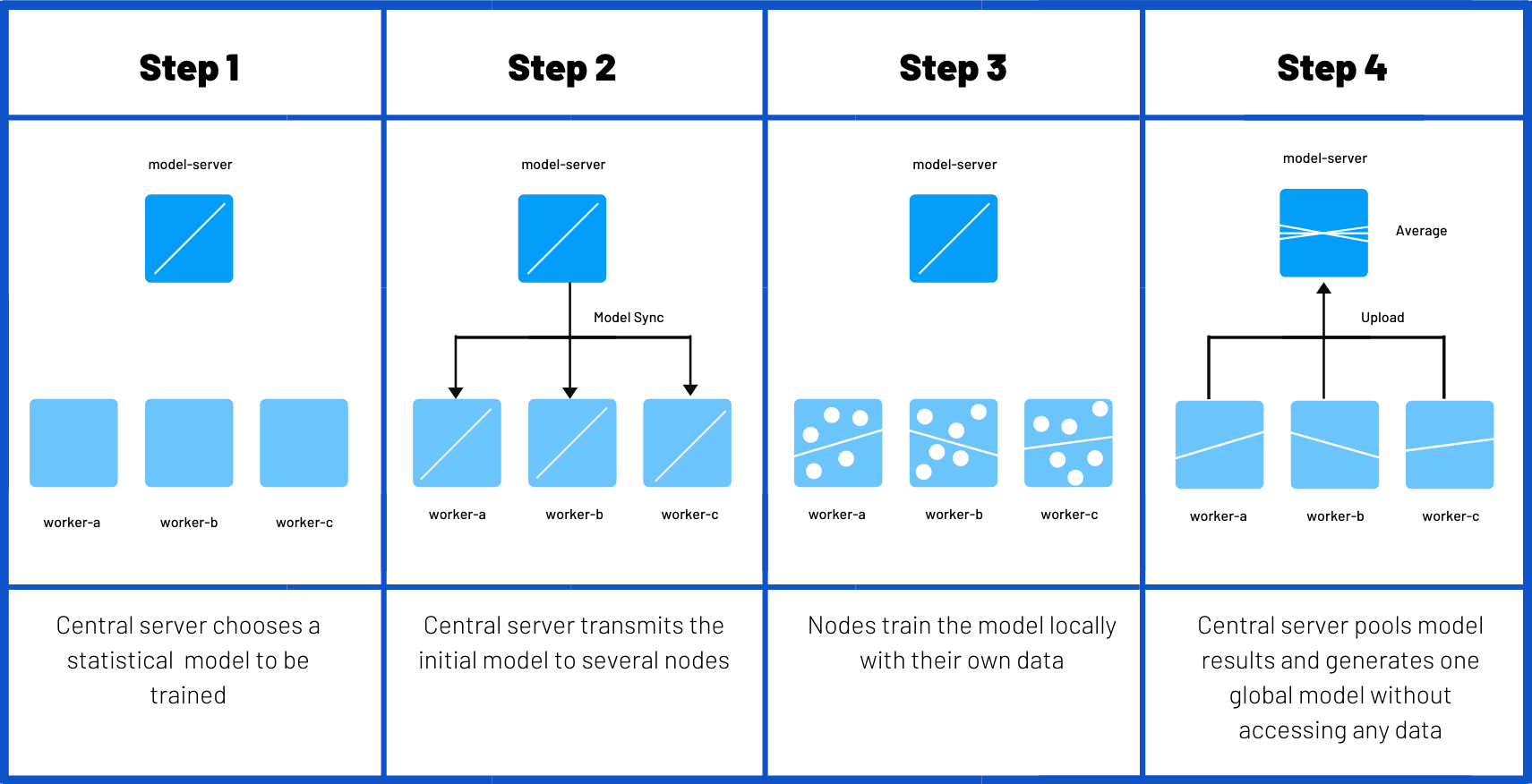

The process above might make you wonder which data is used to train the machine learning model. There are a lot of publicly available datasets you can use for this step. In general, though, training data is collected and stored on a central server. To avoid this, you can use a technique called Federated Learning. Instead of having a central server train the entire model, several nodes or edge devices each train it on their own local data. Each node then sends updates of the parameters it has learned, either to a central server (Single Party) or to the other nodes in a peer-to-peer setup (Multi Party). All of these updates are then combined into one global model.
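
A minimal sketch of the central-server ("Single Party") variant, assuming a toy linear model y = w·x + b and hypothetical client data. It uses federated averaging: each node runs a few steps of gradient descent on its own data, and the server combines the returned parameters with a mean weighted by each node's number of examples. This is plain Python, not TensorFlow Federated's API, and it leaves out communication, client sampling and privacy safeguards.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """One node trains y = w*x + b on its own data with plain gradient descent.

    Only the resulting (w, b) leaves the node; `data` never does."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in data) / n
        grad_b = sum((w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(client_datasets, rounds=30):
    """Server loop: send the global model out, then average the returned
    parameters, weighting each node by how many examples it trained on."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in client_datasets)
    for _ in range(rounds):
        updates = [(local_update((w, b), d), len(d)) for d in client_datasets]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b


def make_data(xs):
    return [(x, 2.0 * x + 1.0) for x in xs]  # hypothetical private data: y = 2x + 1


clients = [
    make_data([-1.0, -0.5, 0.0, 0.5, 1.0]),
    make_data([-0.8, -0.2, 0.4, 0.9]),
    make_data([-0.9, -0.3, 0.3, 0.6, 0.8, 1.0]),
]
w, b = federated_averaging(clients)
print(f"global model: y = {w:.3f} * x + {b:.3f}")
# prints global model: y = 2.000 * x + 1.000
```

Because every node's toy data lies exactly on the same line, the averaged model converges to w = 2, b = 1; with real, unevenly distributed data, federated averaging only approximates what centralized training would produce.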

The biggest benefit of this setup is that the recorded (sensitive) data never leaves the local node. This approach has been used, for example, in Apple’s QuickType keyboard to predict emojis based on the usage of a large number of users. Earlier this year, Google released TensorFlow Federated, a framework for building applications that learn from decentralized data.

Takeaway

At ACA we highly value privacy, and so do our customers. Keeping your personal data and sensitive information private is (y)our priority. With techniques like federated learning, we can help you unleash your AI potential without compromising on data security. Curious how exactly that would work in your organization? Send us an email through our contact form and we’ll soon be in touch.

What others have also read

At ACA, Ship-IT Days are no-nonsense innovation days.


The world of chatbots and Large Language Models (LLMs) has recently undergone a spectacular evolution. ChatGPT, developed by OpenAI, is one of the most notable examples: it reached over 1,000,000 users in just five days. This rise underlines the growing interest in conversational AI and the unprecedented possibilities that LLMs offer.

LLMs and ChatGPT: A Short Introduction

Large Language Models (LLMs) and chatbots have become indispensable concepts in artificial intelligence. They represent the future of human-computer interaction: LLMs are powerful AI models that understand and generate natural language, while chatbots are programs that simulate human conversation and perform tasks based on textual input. ChatGPT, one of the best-known chatbots, gained immense popularity in a short period of time.

LangChain: the Bridge to LLM Based Applications

LangChain is one of the frameworks that enable developers to leverage the power of LLMs when building and supporting applications. This open-source library, initiated by Harrison Chase, offers a generic way to address different LLMs and extend them with new data and functionality. Currently available in Python and TypeScript/JavaScript, LangChain is designed to make it easy to connect different LLMs and data environments.

LangChain Core Concepts

To fully understand LangChain, we need to explore some core concepts.

Chains: LangChain is built on the concept of a chain. A chain is simply a generic sequence of modular components. Chains can be assembled for specific use cases by selecting the right components.

LLMChain: The most common type of chain within LangChain is the LLMChain. It consists of a PromptTemplate, a Model (which can be an LLM or a chat model) and an optional OutputParser. A PromptTemplate is a template used to generate a prompt for the LLM.
Here's an example: This template allows the user to fill in a topic, after which the completed prompt is sent as input to the model. LangChain also offers ready-made PromptTemplates, such as Zero Shot, One Shot and Few Shot prompts.

Model and OutputParser: A Model is the implementation of the LLM itself. LangChain provides integrations for several LLMs, including OpenAI, GPT4All and HuggingFace. You can also add an OutputParser to process the model's output. For example, a ListOutputParser is available to convert the LLM's output into a list in the programming language you are using.

Data Connectivity in LangChain

To give an LLM chain access to specific data, such as internal data or customer information, LangChain uses several concepts:

Document Loaders: Document Loaders allow LangChain to retrieve data from various sources, such as CSV files and URLs.

Text Splitter: This tool splits documents into smaller pieces so that LLMs can process them more easily, taking into account limitations such as token limits.

Embeddings: LangChain offers several integrations for converting textual data into numerical vectors, which makes the data easier to compare and process. The popular OpenAI Embeddings is an example.

VectorStores: This is where the embedded textual data is stored, typically in a vector database in which each vector represents an embedded piece of text. FAISS (from Meta) and ChromaDB are popular examples.

Retrievers: Retrievers connect the LLM to the data in VectorStores. They retrieve relevant data and extend the prompt with the necessary context, enabling context-aware questions and assignments. An example of such a context-aware prompt looks like this:

Demo Application

To illustrate the power of LangChain, we can create a demo application that follows these steps:

1. Retrieve data based on a URL.
2. Split the data into manageable blocks.
3. Store the data in a vector database.
4. Grant an LLM access to the vector database.
5. Create a Streamlit application that gives users access to the LLM.

Below we show how to perform these steps in code.

1. Retrieve Data

Fortunately, retrieving data from a website with LangChain does not require any manual work. Here's how we do it:

2. Split Data

The resulting data variable above now contains a collection of pages from the website. These pages contain a lot of information, sometimes too much for the LLM to work with, as many LLMs can only handle a limited number of tokens. Therefore, we need to split up the documents:

3. Store Data

Now that the data has been broken down into smaller contextual fragments, we store it in a vector database so that the LLM can access it efficiently. In this example we use Chroma:

4. Grant Access

Now that the data is saved, we can build a "Chain" in LangChain. A chain is simply a series of LLM executions to achieve the desired outcome. For this example we use the existing RetrievalQA chain that LangChain offers. This chain retrieves relevant contextual fragments from the newly built database, processes them together with the question in an LLM and delivers the desired answer:

5. Create Streamlit Application

Now that we've given the LLM access to the data, we need to provide a way for users to consult the LLM. To do this efficiently, we use Streamlit:

Agents and Tools

In addition to the standard chains, LangChain also lets you create Agents for more advanced applications. Agents have access to various tools that perform specific functions. These tools can be anything from a "Google Search" tool to Wolfram Alpha, a tool for solving complex mathematical problems. This allows Agents to support more advanced reasoning, deciding which tool to use to answer a question.

Alternatives for LangChain

Although LangChain is a powerful framework for building LLM-driven applications, there are alternatives available.
For example, a popular tool is LlamaIndex (formerly known as GPT Index), which focuses on connecting LLMs with external data. LangChain, on the other hand, offers a more complete framework for building applications with LLMs, including tools and plugins.

Conclusion

LangChain is an exciting framework that opens the door to a new world of conversational AI and application development with Large Language Models. With the ability to connect LLMs to various data sources and the flexibility to build complex applications, LangChain promises to become an essential tool for developers and businesses looking to take advantage of the power of LLMs. The future of conversational AI is looking bright, and LangChain plays a crucial role in this evolution.
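
To make the Text Splitter, Embeddings, VectorStore and Retriever concepts more tangible, here is a library-free sketch of what they do under the hood. It rests on loud simplifying assumptions: chunks are overlapping word windows, the "embedding" is a bag-of-words count vector compared with cosine similarity (real embedding models such as OpenAI Embeddings produce dense learned vectors), and no LLM is called, so the output is only the context-aware prompt a retrieval chain would hand to the model. All names (`split_words`, `InMemoryVectorStore`, `build_prompt`) are hypothetical and not part of LangChain's API.

```python
import math
import re
from collections import Counter


def split_words(text, chunk_size=10, overlap=2):
    """Text splitter: overlapping windows of `chunk_size` words."""
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def embed(text):
    """Toy embedding: lower-cased word counts instead of a learned dense vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Vector store + retriever: keeps (vector, chunk) pairs, ranks by similarity."""

    def __init__(self):
        self.items = []

    def add(self, texts):
        self.items.extend((embed(t), t) for t in texts)

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "Context:\n{context}\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(store, question, k=2):
    """Retriever step: extend the prompt with the most relevant chunks."""
    context = "\n".join(store.search(question, k=k))
    return PROMPT_TEMPLATE.format(context=context, question=question)


text = (
    "Edge devices process data close to its source. "
    "Federated learning trains a shared model without collecting raw data. "
    "The Coral Dev Board contains an Edge TPU that runs TensorFlow Lite models."
)
chunks = split_words(text)
store = InMemoryVectorStore()
store.add(chunks)
prompt = build_prompt(store, "Which board contains an Edge TPU?")
print(prompt)
```

The overlap between windows keeps a sentence that straddles two chunks partly visible in both, which is the same motivation behind the overlap settings of real text splitters.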


In this blog post, I would like to give you a high-level overview of the OAuth 2 specification. When I started to learn about it, I got lost very quickly in all the different aspects involved. To make sure you don’t have to go through the same thing, I’ll explain OAuth 2 as if you don’t have a technical background at all. Since there is a lot to cover, let’s jump right in!

The core concepts of security

When it comes to securing an application, there are 2 core concepts to keep in mind: authentication and authorization.

Authentication

With authentication, you’re trying to answer the question “Who is somebody?” or “Who is this user?” You have to look at it from the perspective of your application or your server. They basically have stranger danger: they don’t know who you are, and there is no way for them to know unless you prove your identity to them. So, authentication is the process of proving to the application that you are who you claim to be. A real-world example is showing your ID or passport to the police when they pull you over.

Authentication is not part of the core OAuth 2 specification. However, an extension to the specification called OpenID Connect handles this topic.

Authorization

Authorization is the flip side of authentication. Once users have proven who they are, the application needs to figure out what they are allowed to do. That’s essentially what the authorization process does. An easy way to think about this is the following example. If you are a teacher at a school, you can access information about the students in your class. However, if you are the principal of the school, you probably have access to the records of all the students in the school. You have broader access because of your job title.
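
The teacher and principal example can be sketched as a simple authorization check. This is a hypothetical illustration, not part of the OAuth 2 specification: it assumes the user has already been authenticated, and the `User`/`Student` types, job titles and class IDs are made up for the example. Note the deny-by-default branch for anyone who is neither a teacher nor the principal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    job_title: str      # hypothetical roles: "teacher" or "principal"
    class_id: str = ""  # only meaningful for teachers


@dataclass(frozen=True)
class Student:
    name: str
    class_id: str


def can_view_record(user: User, student: Student) -> bool:
    """Authorization check for a user whose identity has already been authenticated."""
    if user.job_title == "principal":
        return True  # the principal may see every student in the school
    if user.job_title == "teacher":
        return user.class_id == student.class_id  # a teacher only sees their own class
    return False  # anyone else is denied by default


alice = User("Alice", "teacher", class_id="3B")
bob = User("Bob", "principal")
emma = Student("Emma", class_id="3B")
liam = Student("Liam", class_id="4A")

print(can_view_record(alice, emma))  # prints True
print(can_view_record(alice, liam))  # prints False
print(can_view_record(bob, liam))    # prints True
```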

OAuth 2 Roles

To fully understand OAuth 2, you have to be aware of the following 4 actors that make up the specification:

- Resource Owner
- Resource Server
- Authorization Server
- Client / Application

As before, let’s explain it with a very basic example to see how it actually works. Let’s say you have a jacket. Since you own that jacket, you are the Resource Owner and the jacket is the Resource you want to protect. You want to store the jacket in a locker to keep it safe. The locker acts as the Resource Server: you don’t own it, but it holds on to your things for you. Since you want to keep the jacket from being stolen, you put a lock on the locker. That lock is the Authorization Server. It handles the security aspects and makes sure that only you, or someone you give permission, can access the jacket. If you want a friend to retrieve your jacket from the locker, that friend can be seen as the Client or Application actor in the OAuth flow. The Client always acts on the user’s behalf.

Tokens

The next concept that you’re going to hear about a lot is tokens. There are various types of tokens, but all of them are very straightforward to understand. The 2 types of tokens that you encounter the most are access tokens and refresh tokens.

When it comes to access tokens, you might have heard about JWT tokens, bearer tokens or opaque tokens. Those are really just implementation details that I’m not going to cover in this article. In essence, an access token is something you provide to the resource server in order to get access to the items it is holding for you. For example, you can see access tokens as the paper tickets you buy at the carnival. When you want to get on a ride, you present your ticket to the person in the booth and they let you on. You enjoy your ride and afterwards your ticket expires. Important to note is that whoever has the token, owns the token.
So be very careful with them. If someone else gets hold of your token, they can access your items on your behalf!

Refresh tokens are very similar to access tokens. Essentially, you use them to get more access tokens. While access tokens are typically short-lived, refresh tokens tend to have a much later expiry date. To go back to our carnival example, a refresh token could be your parents’ credit card, which can be used to buy more carnival tickets for you to spend on rides.

Scopes

The next concept to cover is scopes. A scope is basically a description of things that a person can do in an application. You can see it as a job role in real life (e.g. a principal or teacher in a high school). Certain scopes grant you more permissions than others. I know I said I wasn’t going to get into technical details, but if you’re familiar with Spring Security, you can compare scopes with what Spring Security calls roles: a scope roughly corresponds to a role. The OAuth specification does not define what a scope should look like, but scopes are often dot-separated strings like blog.write. Google, on the other hand, uses URLs as scopes. For example, to allow read-only access to someone’s calendar, Google uses the scope https://www.googleapis.com/auth/calendar.readonly.

Grant types

Grant types are typically where things start to get confusing for people. Let’s start by listing the most commonly used grant types:

- Client Credentials
- Authorization Code
- Device Code
- Refresh
- Password
- Implicit

Client Credentials is a grant type used very frequently when 2 back-end services need to communicate with each other in a secure way.

The next one is the Authorization Code grant type, which is probably the most difficult grant type to fully grasp. You use this grant type whenever you want users to log in via a browser-based login form.
If you have ever used the ‘Log in with Facebook’ or ‘Log in with Google’ button on a website, then you’ve already experienced an Authorization Code flow without even knowing it!

Next up is the Device Code grant type, which is fairly new on the OAuth 2 scene. It’s typically used on devices with limited input capabilities, like a TV. For example, if you want to log in to Netflix, instead of typing your username and password on the TV, you are shown a link and a code, which you then fill in using the mobile app.

The Refresh grant type most often goes hand in hand with the Authorization Code flow. Since access tokens are short-lived, you don’t want to bother your users with logging in again each time the access token expires. The refresh flow therefore uses refresh tokens to acquire new access tokens whenever they’re about to expire.

The last 2 grant types are Password and Implicit. These less secure options are not recommended when building new applications. We’ll touch on them briefly in the next section, which explains the above grant types in more detail.

Authorization flows

An authorization flow consists of one or more steps that have to be executed in order for a user to get authorized by the system. We’ll discuss 4 authorization flows:

- Client Credentials flow
- Password flow
- Authorization Code flow
- Implicit flow

Client Credentials flow

The Client Credentials flow is the simplest flow to implement. It works much like a traditional username/password login. Only use this flow if you can trust the client/application, as the client credentials are stored within the application. Don’t use it for single-page apps (SPAs) or mobile apps, as malicious users can decompile the app to get hold of the credentials and use them to access secured resources. In most use cases, this flow is used for secure communication between 2 back-end systems. So how does the Client Credentials flow work?
Each application has a client ID and secret that are registered on the authorization server. It presents those to the authorization server to get an access token, and uses that token to get the secure resource from the resource server. If at some point the access token expires, the same process repeats itself to get a new token.

Password flow

The Password flow is very similar to the Client Credentials flow, but it is very insecure because a third actor is involved: an actual end user. Instead of a trusted client presenting an ID and secret to the authorization server, we now have a user ‘talking’ to a client. In a Password flow, the user provides their personal credentials to the client. The client then uses these credentials to get access tokens from the authorization server. This is why a Password flow is not secure: we must be absolutely sure that we can trust the client not to abuse the credentials for malicious purposes. Exceptions where this flow could still be used are command line applications or corporate websites where end users have to trust the client apps they use on a daily basis. Apart from these cases, implementing this flow is not recommended.

Authorization Code Flow

This is the flow that you definitely want to understand, as it’s the one used most often when securing applications with OAuth 2. It is a bit more complicated than the previously discussed flows. It’s important to understand that this flow is confidential, secure and browser-based. The flow works by making a lot of HTTP redirects, which is why a browser is an important actor in this flow. There’s also a back-channel request (called that because the user is not involved in this part of the flow) in which the client or application talks directly to the authorization server. In this flow, the user typically has to approve the scopes or permissions that will be granted to the application.
An example could be a 3rd-party application that asks whether it’s allowed to access your Facebook profile picture after you log in with the ‘Log in with Facebook’ button.

Let’s apply the Authorization Code flow to our ‘jacket in the locker’ example to get a better understanding. Our jacket is in the locker and we want to lend it to a friend. Our friend goes to the (high-tech) locker. The locker calls us, as we are the Resource Owner. This call is one of those redirects we talked about earlier. At this point, we establish a secure connection to the lock, which acts as the authorization server. We can now safely provide our credentials to give permission to unlock it. The authorization server then gives our friend a temporary code, the authorization code (sometimes called the OAuth code). Our friend then uses that code to obtain an access token, which opens the locker so they can get our jacket.

Implicit flow

The Implicit flow is basically the same as the Authorization Code flow, but without the temporary authorization code. After logging in, the authorization server immediately sends back an access token, without a back-channel request. This is less secure, as the token is exposed to the browser and could be intercepted, for example via a man-in-the-middle attack.

Conclusion

OAuth 2 may look daunting at first because of all the different actors involved. Hopefully, you now have a better understanding of how they interact with each other. With this knowledge in mind, it will be much easier to grasp the technical details once you start delving into them.
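
To tie the roles, tokens, scopes and flows together, here is a toy in-memory simulation of the Client Credentials and Authorization Code flows using the locker analogy. It is a sketch under heavy assumptions, not a usable OAuth implementation: there is no HTTP, no real user login and no refresh tokens, the redirect is simulated by returning a URL string, and all names (`AuthorizationServer`, `ResourceServer`, the `locker.read` scope, client IDs and the `friend.example` URL) are hypothetical. Real systems should rely on a standards-compliant authorization server.

```python
import secrets
import time
from urllib.parse import parse_qs, urlparse


class AuthorizationServer:
    """Toy in-memory authorization server: the 'lock' in the locker analogy."""

    def __init__(self, token_ttl=300):
        self.clients = {}  # client_id -> (client_secret, redirect_uri or None)
        self.codes = {}    # authorization code -> (client_id, scopes)
        self.tokens = {}   # access token -> (scopes, expiry timestamp)
        self.token_ttl = token_ttl

    def register_client(self, client_id, client_secret, redirect_uri=None):
        self.clients[client_id] = (client_secret, redirect_uri)

    def _check_client(self, client_id, client_secret):
        client = self.clients.get(client_id)
        if client is None or not secrets.compare_digest(client[0], client_secret):
            raise PermissionError("invalid client credentials")

    def _issue_token(self, scopes):
        token = secrets.token_urlsafe(16)
        self.tokens[token] = (frozenset(scopes), time.time() + self.token_ttl)
        return token

    def client_credentials_grant(self, client_id, client_secret, scopes):
        # Back end to back end: the client proves its own identity; no user involved.
        self._check_client(client_id, client_secret)
        return self._issue_token(scopes)

    def authorize(self, client_id, redirect_uri, scopes, user_approved):
        # Front channel: once the user has logged in and approved the scopes,
        # the browser is redirected back to the client with a short-lived code.
        client = self.clients.get(client_id)
        if client is None or client[1] is None or client[1] != redirect_uri:
            raise PermissionError("unknown client or redirect URI")
        if not user_approved:
            raise PermissionError("user denied access")
        code = secrets.token_urlsafe(8)
        self.codes[code] = (client_id, frozenset(scopes))
        return f"{redirect_uri}?code={code}"

    def exchange_code(self, client_id, client_secret, code):
        # Back channel: the client trades the code plus its secret for a token.
        self._check_client(client_id, client_secret)
        owner, scopes = self.codes.pop(code, (None, frozenset()))  # codes are single-use
        if owner != client_id:
            raise PermissionError("invalid or already used code")
        return self._issue_token(scopes)

    def introspect(self, token):
        """Return the token's scopes, or an empty set if it is unknown or expired."""
        scopes, expires_at = self.tokens.get(token, (frozenset(), 0.0))
        return scopes if time.time() < expires_at else frozenset()


class ResourceServer:
    """The 'locker': it holds the jacket and only checks tokens and scopes."""

    def __init__(self, auth_server):
        self.auth_server = auth_server

    def get_jacket(self, access_token):
        if "locker.read" not in self.auth_server.introspect(access_token):
            raise PermissionError("missing, expired or insufficient token")
        return "blue jacket"


auth = AuthorizationServer()
lockers = ResourceServer(auth)

# Client Credentials flow: a trusted back-end service with its own ID and secret.
auth.register_client("backup-service", "s3cr3t")
service_token = auth.client_credentials_grant("backup-service", "s3cr3t", {"locker.read"})
print(lockers.get_jacket(service_token))

# Authorization Code flow: your friend's app acts on your behalf after you approve.
auth.register_client("friend-app", "friend-secret", "https://friend.example/callback")
redirect = auth.authorize("friend-app", "https://friend.example/callback",
                          {"locker.read"}, user_approved=True)
code = parse_qs(urlparse(redirect).query)["code"][0]
friend_token = auth.exchange_code("friend-app", "friend-secret", code)
print(lockers.get_jacket(friend_token))
```

The authorization code only travels through the (simulated) browser redirect, while the access token is obtained on the back channel together with the client secret; that separation is what the Implicit flow gives up.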

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!
