You may well be familiar with the term ‘data mesh’. It is one of those data buzzwords that have been doing the rounds for some time now. Even though data mesh can bring a lot of value to an organization in quite a few situations, we should not let the fancy terminology blind us. If you are looking to develop a proper data strategy, you would do well to start by asking yourselves the following questions: what is the challenge we are seeking to tackle with data? And how can a solution contribute to achieving our business goals?

There is certainly nothing new about organizations using data, but we have come a long way. Initially, companies gathered data from various systems in a data warehouse. The drawback was that data management was handled by a central team, so the turnaround time for reports could run up considerably. Moreover, the data engineers on that team needed a solid understanding of the entire business. In the years that followed, the rise of social media meant the sheer amount of data mushroomed, which in turn gave rise to the term Big Data. As a result, tools were developed to analyse huge data volumes, with the focus increasingly shifting towards self-service.

The latter trend now means that the business itself is increasingly able to handle data under its own steam. This in turn brings yet another challenge: as is often the case, we cannot dissociate technology from the company’s processes or from the people who use the data. Are these people ready to start using data? Do they have the right skills, and have you thought about the kind of skills you will need tomorrow? What are the company’s goals and how can employees contribute towards achieving them? The human aspect is a crucial component of any effective data strategy.

How to make the difference with data?

In practice, when it comes to their data strategies, a lot of companies have not progressed from where they were a few years ago. Needless to say, this is hardly a robust foundation for moving on to the next step. So let’s home in on some of the key elements of any data strategy:

- Data need to incite action: it is not enough to just compare a few numbers; a high-quality report leads to a decision or should at the very least make it clear which kind of action is required.

- Sharing is caring: if you have the data anyway, why not share them? Not just with your own in-house departments, but also with the outside world. If you manage to make data available again to the customer, there is a genuine competitive advantage to be had.

- Visualise: data are often collected in poorly organised tables without proper layout. Studies show the human brain struggles to read these kinds of tables. Visualising data (using GeoMapping, for instance) may lead you to insights you had not previously thought of.

- Connect data sets: when you combine data sets, 1+1 should always equal 3. If you are measuring the efficacy of a marketing campaign, for example, do not just look at the number of clicks. The real added value lies in correlating the data you have with data about the business, such as (increased) sales figures.

- Make data transparent: be clear about your business goals and KPIs, so everybody in the organization is able to use the data and, in doing so, contribute to meeting a benchmark.

- Train people: make sure your people understand how to use technology, but also how data are able to simplify their duties and how data contribute to achieving the company goals.
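
To make the ‘connect data sets’ point a little more tangible, here is a minimal sketch of joining campaign clicks with sales figures and measuring how strongly they move together. The figures, the dictionary layout and the function names are all made up for illustration; in practice the two data sets would come from separate systems.

```python
from math import sqrt

# Hypothetical daily figures from two separate systems (illustrative only).
clicks_by_day = {"2024-03-01": 120, "2024-03-02": 340, "2024-03-03": 560, "2024-03-04": 410}
sales_by_day = {"2024-03-01": 14, "2024-03-02": 31, "2024-03-03": 52, "2024-03-05": 20}


def join_on_day(clicks, sales):
    """Inner join: keep only the days that appear in both data sets."""
    shared_days = sorted(clicks.keys() & sales.keys())
    return [(day, clicks[day], sales[day]) for day in shared_days]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


joined = join_on_day(clicks_by_day, sales_by_day)
r = pearson([row[1] for row in joined], [row[2] for row in joined])
print(f"{len(joined)} shared days, correlation between clicks and sales: {r:.2f}")
```

A high correlation on its own does not prove that the campaign caused the sales, but it does turn two separate reports into one question the business can act on.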

Which problem are you seeking to resolve with data?

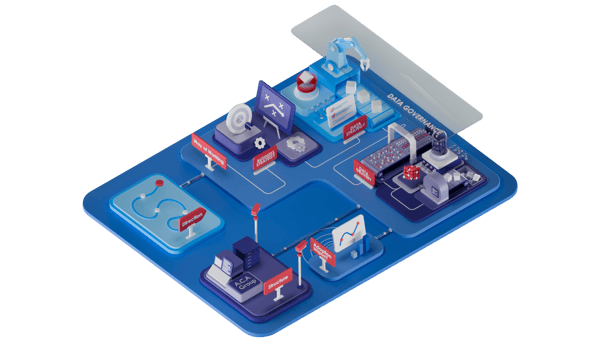

Once you have got the foundations right, we can work out a roadmap. No solution should ever start from the data themselves; it always needs to be linked to a challenge or a goal. This is why ACA Group always organises a workshop first in order to establish what the customer’s goals are. Based on the outcome of this workshop, we come up with a concrete problem definition, which sets us on the right track to find a solution for each situation.

The integration of data sets will gain even greater importance in the near future, among other things as part of sustainability reporting. To prepare and guide companies as well as possible, we will be digging deeper into some important terminology, methods and challenges around data in a series of blogs over the course of this year.

In the meantime, are you keen to find out exactly what ‘Data Mesh’ entails, and why it could be rewarding for your organization?

What others have also read

Over the last few years, digitization and innovation have had an enormous impact on the application landscape. A company’s application architecture used to be relatively simple, but that is no longer the case. Numerous cloud solutions, rented on a monthly basis, now complicate things to the point where it’s no longer obvious which data is kept where. Combine this trend with the shift towards self-service applications from a data consumption perspective and the impact on data architectures is inevitable. In this blog post, we’ll dive deeper into this (r)evolution in the world of data and the impact of a changing application landscape on your data architecture.

Keeping an open data architecture

‘Data’ is a broad concept and includes an incredible number of domains that all require specific knowledge or some sort of specialization. There are plenty of examples: data architecture, data visualization, data management, data security, GDPR, and so on. Over the years, many organizations have tried to get a grasp on all these different ‘data domains’. And this really isn’t a cakewalk, since innovative changes are taking place in each of these domains. Additionally, they often overlap with other, newer concepts such as AI, data science, machine learning, and others. In any case, it’s preferable to keep your vision and data architecture as ‘open’ as possible. This keeps the impact of future changes on your current implementation as low as possible. Denying such changes means slowing down innovation, possibly annoying your end-users and vastly increasing the chance of a huge additional cost a few years down the line when the need to revise your architecture can no longer be postponed.

Modern applications complicate combining data

The amount of data increases exponentially every year. Moreover, the new generation of end-users is used to being served at their beck and call. This is a trend that the current application landscape clearly supports.
Within many applications, software vendors offer data in real time in an efficient, attractive and insightful way. Huge props to these vendors of course, but this makes it harder for CIOs to deliver combined data to end-users. “What is the impact of a marketing campaign on the sale of a certain product?” Answering a question like this poses a challenge for many organizations. The answer requires combining data from two (admittedly well-organized) applications. For example, Atlassian offers reporting features in Jira while Salesforce does the same with its well-known CRM platform. The reporting features in both of these software packages are actually very detailed and allow you to create powerful reports. However, it’s difficult to combine this data into one single report. Moreover, besides well-structured Marketing and Sales domains, a question like that requires an overarching technical and organizational alignment. Which domain has the responsibility or the mandate to answer such a question? Is there any budget available? What about resources? And which domain will bear these costs?

Does Self-Service BI offer a solution?

In an attempt to answer such questions, solutions such as Self-Service BI entered the market. These tools can easily combine data and provide insights their users might not have thought of yet. The only requirement is that these tools need access to the data in question. Sounds simple enough, right? Self-Service BI tools have boomed over the past few years, with Microsoft setting the example with Power BI. By making visualizations and intuitive ‘self-service data loaders’ a key component, they were able to convince the ‘business’ to invest. But this creates a certain tension between the business users of these tools and CIOs. The latter slowly lose their grip on their own IT landscape, since a Self-Service BI approach may also spawn a lot of ‘shadow-BI’ initiatives in the background.
For example, someone may have been using Google Data Studio on their own initiative without the CIO knowing, while that CIO is trying to standardize a toolset using Power BI. The result: tons of data duplication and security infringements, and we haven’t even mentioned GDPR compliance yet.

Which other solutions are there?

The standard insights and analytics reports within applications are old news, and the demand for real-time analytics, also known as streaming analytics, is rising. For example, during online shopping, stores display their actual stock of a product on the product page itself. Pretty run-of-the-mill, right? So why is it so hard to answer the question about the impact of my marketing campaign on my sales in a report? The demands and needs for data are changing. Who is the owner of which data and who determines its uses? Does historical data disappear if it’s not stored in a data warehouse? If the data is still available within the application where it was initially created, how long will it remain there? Storing the data in a data lake or data repository is a possible cheap(er) solution. However, this data is barely organized, if at all, making it difficult to use for things like management reporting. Perhaps offloading this data to a data warehouse is the best solution? Well-structured data, easily combined with data from other domains and therefore an ideal basis for further analysis. But… the information is not available in real time and this solution can get pretty costly. Which solution best fits your requirements?

Takeaway

As you’ve noticed by now, it’s easy to list a ton of questions and challenges regarding the structuring of data within organizations. Some data-related questions require a quick answer, while other, more analytical or strategic questions don’t actually need real-time data. A data architecture that takes all these needs into account and is open to changes is a must.
We believe in a data approach in which the domain owner is also the owner of the data and makes this data available to the rest of the organization. It’s the responsibility of the domain owner to organize their data in such a way that it can provide an answer to as many questions from the organization as possible. It’s possible that this person doesn’t have the necessary knowledge or skills within their team to organize all of this. Therefore, a new role within the organization is necessary to support domain owners with knowledge and resources: the role of a Chief Data Officer (CDO). They will orchestrate everything in the organization when it comes to data and have the mandate to enforce general guidelines. Research shows that companies that have appointed a CDO are more successful when rolling out new data initiatives.

ACA Group is committed to guiding its customers as well as possible in their data approach. It’s vital to have a clear vision, supported by a future-proof data architecture: an architecture open to change and innovation, not just from a technical perspective, but also when it comes to changing data consumption demands. This matters to the new generation of end-users, and it is a challenge for most data architectures and organizations.


In May 2024, Microsoft’s announcement of Copilot for Power BI signaled a major shift in data analysis. This AI-powered tool lets users perform complex data tasks using conversational prompts, transforming how data is modeled, analyzed, and presented. But what does this mean for businesses, IT managers, and data analysts? Find out how Copilot integrates into Power BI, the range of tasks it can handle, and its broader implications for data analysts. While Copilot simplifies routine tasks, it also demands new skills and perspectives to fully realize its potential. Let’s dive deeper into this.

What is Copilot for Power BI?

Incorporating AI into Power BI

Copilot introduces powerful AI-driven tools that automate and streamline tasks that previously required advanced technical knowledge. Here’s a breakdown of Copilot’s main functions in Power BI:

- Summarize data models: provides overviews of underlying semantic models.

- Suggest content for reports: uses prompts to recommend relevant visuals and layouts.

- Generate visuals and report pages: automates the creation of report elements.

- Answer data model questions: responds to data queries within the model context.

- Write DAX queries: generates DAX expressions, reducing the need for deep DAX expertise.

- Enhance Q&A with synonyms and descriptions: improves model usability by enabling natural language processing.

These features enable quicker, easier data exploration and report creation. However, there’s more beneath the surface for data analysts to consider.

Copilot: a junior-level assistant with limitations

If we look at Copilot as a colleague, think of it as a junior-level assistant, capable of helping with tasks such as generating reports, dashboards, and queries in Power BI. However, Copilot operates without domain-specific knowledge and tends to take a literal approach to tasks.
It can efficiently follow instructions and generate outputs based on well-constructed prompts, but lacks the deep business context that human analysts bring to the table. Copilot doesn’t possess the ability to understand the nuances of a business problem or the industry-specific intricacies that often influence data insights. Yet 😉. While Copilot can automate some of the more routine and mechanical aspects of data analysis, such as building visuals or applying basic transformations, it still requires guidance and oversight. It’s up to the analyst to ensure that Copilot’s outputs are relevant, meaningful, and aligned with the organization’s goals. Rather than replacing data analysts, Copilot elevates their roles, pushing them to focus on higher-level tasks that involve a high degree of critical thinking.

Adopting Copilot for Power BI: costs and challenges

Despite its promise, Copilot comes with a significant entry barrier for many organizations. As of now, using Copilot in Power BI requires at least an F64 Fabric capacity or a P1 Premium capacity, which is rather costly. Smaller organizations or those with limited budgets may not have immediate access, which limits widespread adoption at the moment. For organizations that do invest in the necessary infrastructure, Copilot has the potential to speed up certain processes. However, the high cost of entry means that data analysts in these environments will need to demonstrate a clear return on investment. This makes it even more critical for analysts to focus on delivering high-value insights that directly impact business decisions, rather than simply generating reports.

The impact of Copilot on the role of the data analyst

Front-end development of Power BI reports and dashboards used to be a key responsibility of Power BI developers or the data analysts themselves. However, with Copilot, well-constructed prompts can lead to fully functional reports and dashboards, automating much of the manual work.
This means that data analysts can significantly reduce the time spent on technical report building. The process of designing visuals, formatting reports, and creating dashboards will largely be handled by Copilot. While automation will save time, it will also reshape the job of the data analyst:

- Shifting focus from dashboards to business value: data analysts will prioritize delivering insights over building dashboards, making sure those insights are actionable and easy for business stakeholders to understand.

- Translating problems into data solutions: analysts must frame business problems as data questions in order to leverage Copilot effectively. Strong business acumen and communication skills are essential for collaborating with leaders and ensuring insights address key challenges.

- Building robust and flexible semantic models: Copilot depends on well-structured models, making data modeling and metadata management essential. Analysts must create robust, flexible, and well-documented semantic models that support evolving business needs, focusing on long-term strategies and key metrics.

- Mastering data governance: to maximize Copilot’s value, data analysts have to make sure that the data is clean, reliable, and well-managed. High-quality data and strong metadata management are critical, as Copilot relies on these to generate effective outputs. The list of considerations Microsoft published for datasets used with Copilot can guide you in the right direction.

Conclusion: is Copilot for Power BI a must-have for your organization?

Microsoft’s Copilot for Power BI is a game-changer, yet it emphasizes the need for analysts to evolve their skills beyond technical tasks. Analysts are being pushed to elevate their work, focusing on insight generation and strategic thinking.
To learn more about what skills will be essential for data analysts in a Copilot-powered environment, read the article on “Essential skills for data analysts in the age of AI”. Curious about the impact of Copilot on your data team or need help with implementing it effectively?


In the ever-evolving landscape of data management, investing in platforms and navigating migrations between them is a recurring theme in many data strategies. How can we ensure that these investments remain relevant and can evolve over time, avoiding endless migration projects? The answer lies in embracing ‘Composability’ - a key principle for designing robust, future-proof data (mesh) platforms.

Is there a silver bullet we can buy off-the-shelf?

The data-solution market is flooded with vendor tools positioning themselves as the platform for everything, the all-in-one silver bullet. It’s important to know that there is no silver bullet. While opting for a single off-the-shelf platform might seem like a quick and easy solution at first, it can lead to problems down the line. These monolithic off-the-shelf platforms often end up too inflexible to support all use cases, not customizable enough, and eventually outdated. This results in big, complicated migration projects to the next silver bullet platform, and in organizations ending up with multiple all-in-one platforms, causing disruptions in day-to-day operations and hindering overall progress.

Flexibility is key to your data mesh platform architecture

A complete data platform must address numerous aspects: data storage, query engines, security, data access, discovery, observability, governance, developer experience, automation, a marketplace, data quality, etc. Some vendors claim their all-in-one data solution can tackle all of these. However, such a platform typically excels in certain aspects but falls short in others. For example, a platform might offer a high-end query engine, but lack depth in the features of the data marketplace included in the solution. To future-proof your platform, it must incorporate the best tools for each aspect and evolve as new technologies emerge.
Today’s cutting-edge solutions can be outdated tomorrow, so flexibility and evolvability are essential for your data mesh platform architecture.

Embrace composability: engineer your future

Rather than locking into one single tool, aim to build a platform with composability at its core. Picture a platform where different technologies and tools can be seamlessly integrated, replaced, or evolved, with an integrated and automated self-service experience on top. A platform that is both generic at its core and flexible enough to accommodate the ever-changing landscape of data solutions and requirements. A platform with a long-term return on investment by allowing you to expand capabilities incrementally, avoiding costly, large-scale migrations. Composability enables you to continually adapt your platform capabilities by adding new technologies under the umbrella of one stable core platform layer.

Two key ingredients of composability

- Building blocks: these are the individual components that make up your platform.

- Interoperability: all building blocks must work together seamlessly to create a cohesive system.

An ecosystem of building blocks

When building composable data platforms, the key lies in sourcing the right building blocks. But where do we get these? Traditional monolithic data platforms aim to solve all problems in one package, but this stifles the flexibility that composability demands. Instead, vendors should focus on decomposing these platforms into specialized, cost-effective components that excel at addressing specific challenges. By offering targeted solutions as building blocks, they empower organizations to assemble a data platform tailored to their unique needs. In addition to vendor solutions, open-source data technologies also offer a wealth of building blocks. It should be possible to combine both vendor-specific and open-source tools into a data platform tailored to your needs.
This approach enhances agility, fosters innovation, and allows for continuous evolution by integrating the latest and most relevant technologies.

Standardization as glue between building blocks

To create a truly composable ecosystem, the building blocks must be able to work together; in other words, they must be interoperable. This is where standards come into play, enabling seamless integration between data platform building blocks. Standardization ensures that different tools can operate in harmony, offering a flexible, interoperable platform.

Imagine a standard for data access management that allows seamless integration across various components. It would enable an access management building block to list data products and grant access uniformly. At the same time, it would allow data storage and serving building blocks to integrate their data and permission models, ensuring that any access management solution can be effortlessly composed with them. This creates a flexible ecosystem where data access is consistently managed across different systems.

The discovery of data products in a catalog or marketplace can be greatly enhanced by adopting a standard specification for data products. With this standard, each data product can be made discoverable in a generic way. When data catalogs or marketplaces adopt this standard, you gain the flexibility to choose and integrate any catalog or marketplace building block into your platform, fostering a more adaptable and interoperable data ecosystem.

A data contract standard allows data products to specify their quality checks, SLOs, and SLAs in a generic format, enabling smooth integration of data quality tools with any data product. It enables you to combine the best solutions for ensuring data reliability across different platforms.

Widely accepted standards are key to ensuring interoperability through agreed-upon APIs, SPIs, contracts, and plugin mechanisms. In essence, standards act as the glue that binds a composable data ecosystem.
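
As an illustration of the data contract idea, here is a minimal sketch of a contract expressed in a generic format, plus a small runner that evaluates it. This does not follow any published standard: the class names, the rule vocabulary (`not_null`, `min`) and the `sales_orders` product are all hypothetical and only serve to show the principle.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class QualityCheck:
    name: str
    column: str
    rule: str               # hypothetical vocabulary: "not_null" or "min"
    threshold: float = 0.0  # only used by the "min" rule


@dataclass(frozen=True)
class DataContract:
    product: str
    freshness_slo_hours: int  # declared here, not evaluated in this sketch
    checks: tuple = ()


# A quality tool only has to understand the shared rule vocabulary,
# not the data product that publishes the contract.
RULES: dict = {
    "not_null": lambda value, _threshold: value is not None,
    "min": lambda value, threshold: value is not None and value >= threshold,
}


def run_checks(contract: DataContract, rows: list) -> dict:
    """Return, per check name, whether every row satisfies the rule."""
    return {
        check.name: all(
            RULES[check.rule](row.get(check.column), check.threshold) for row in rows
        )
        for check in contract.checks
    }


contract = DataContract(
    product="sales_orders",
    freshness_slo_hours=24,
    checks=(
        QualityCheck("order_id_present", "order_id", "not_null"),
        QualityCheck("amount_non_negative", "amount", "min", 0.0),
    ),
)
rows = [{"order_id": "A1", "amount": 19.5}, {"order_id": None, "amount": 5.0}]
results = run_checks(contract, rows)
print(results)
```

Because the runner depends only on the contract format, it could in principle be swapped for any other quality tool that reads the same format, which is the kind of interchangeability composability is after.
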
A strong belief in evolutionary architectures

At ACA Group, we firmly believe in evolutionary architectures and platform engineering, principles that seamlessly extend to data mesh platforms. It’s not about locking yourself into a rigid structure but about creating an ecosystem that can evolve, staying at the forefront of innovation. That’s where composability comes in. Do you want a data platform that not only meets your current needs but also paves the way for the challenges and opportunities of tomorrow?

Let’s engineer it together

Ready to learn more about composability in data mesh solutions?

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!
