Requirements

This alternative approach has two requirements:

- You must be deploying the container in a Kubernetes cluster.

- The application must support adding JVM parameters via an environment variable.

Starting Point

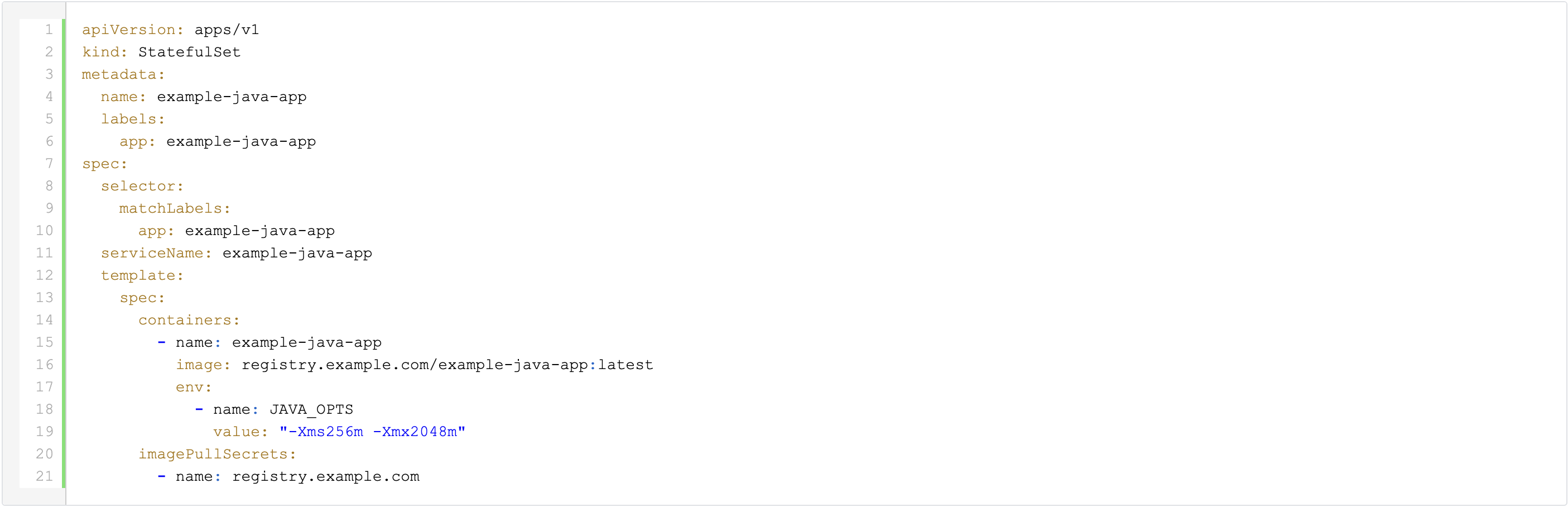

Let's assume that your application is using a StatefulSet that looks something like this:

The name of the JAVA_OPTS environment variable may vary depending on the specific application.
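As a minimal sketch of such a starting point, a StatefulSet without any New Relic configuration could look like the manifest below. The application name `my-app`, the registry `registry.example.com`, the image tag, and the JVM options are placeholders chosen for illustration, not values from a real deployment.

```yaml
# Illustrative baseline: all names, images, and JVM options are placeholders.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: my-app
spec:
  serviceName: my-app
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.0.0
          env:
            # The variable name depends on the application (JAVA_OPTS is assumed here).
            - name: JAVA_OPTS
              value: "-Xms512m -Xmx512m"
```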

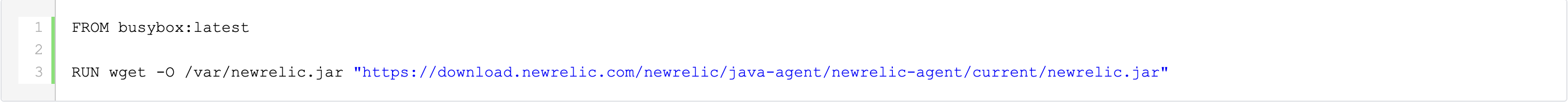

Packaging the New Relic agent jar

To simplify the deployment process, we will create a custom image that contains only the New Relic agent jar. To build this image, we will use a Containerfile, which might look something like this:

"Injecting" the New Relic agent into the application container

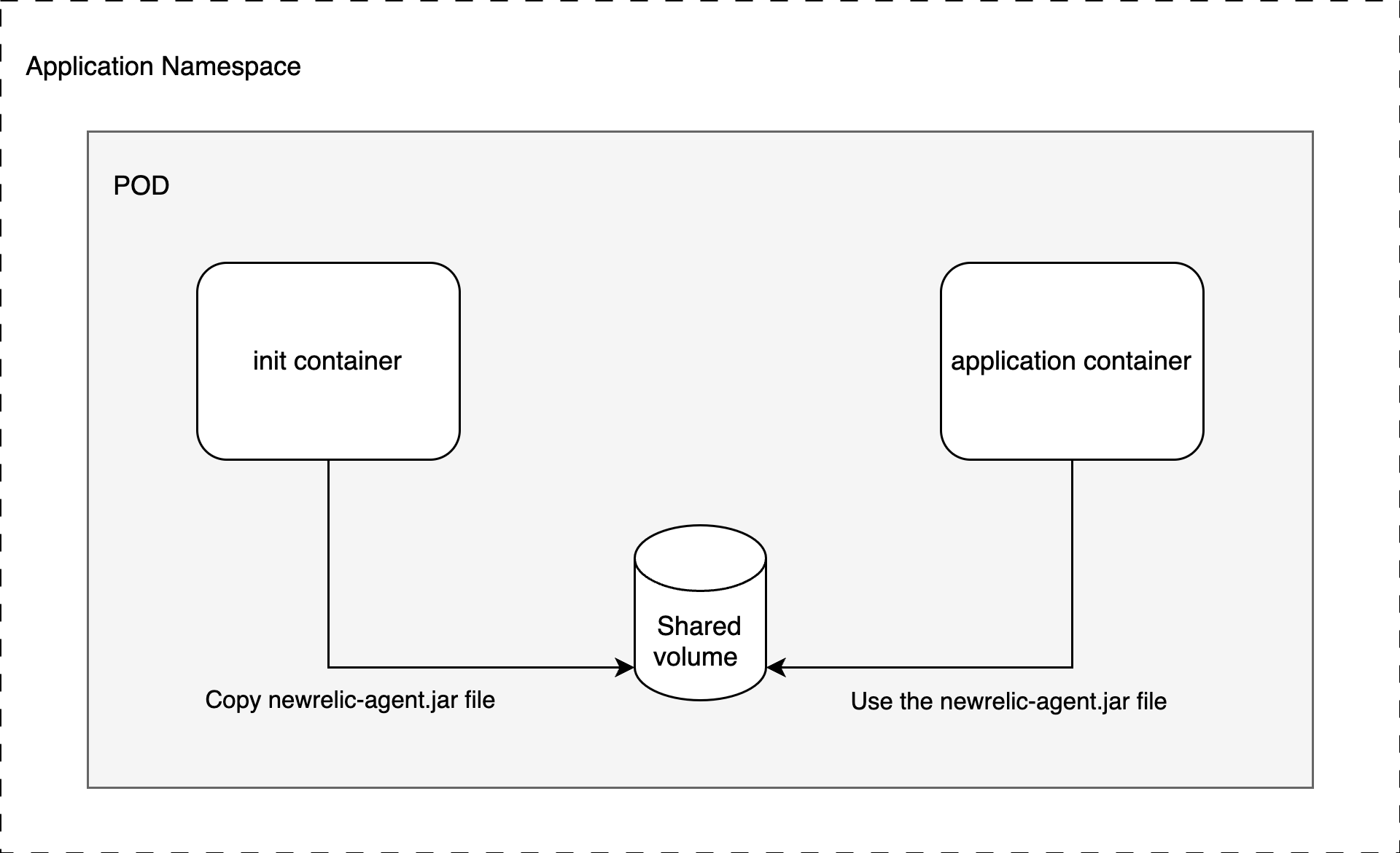

To make the New Relic agent jar available to the application, we will use two Kubernetes concepts: an Init Container and an emptyDir volume.

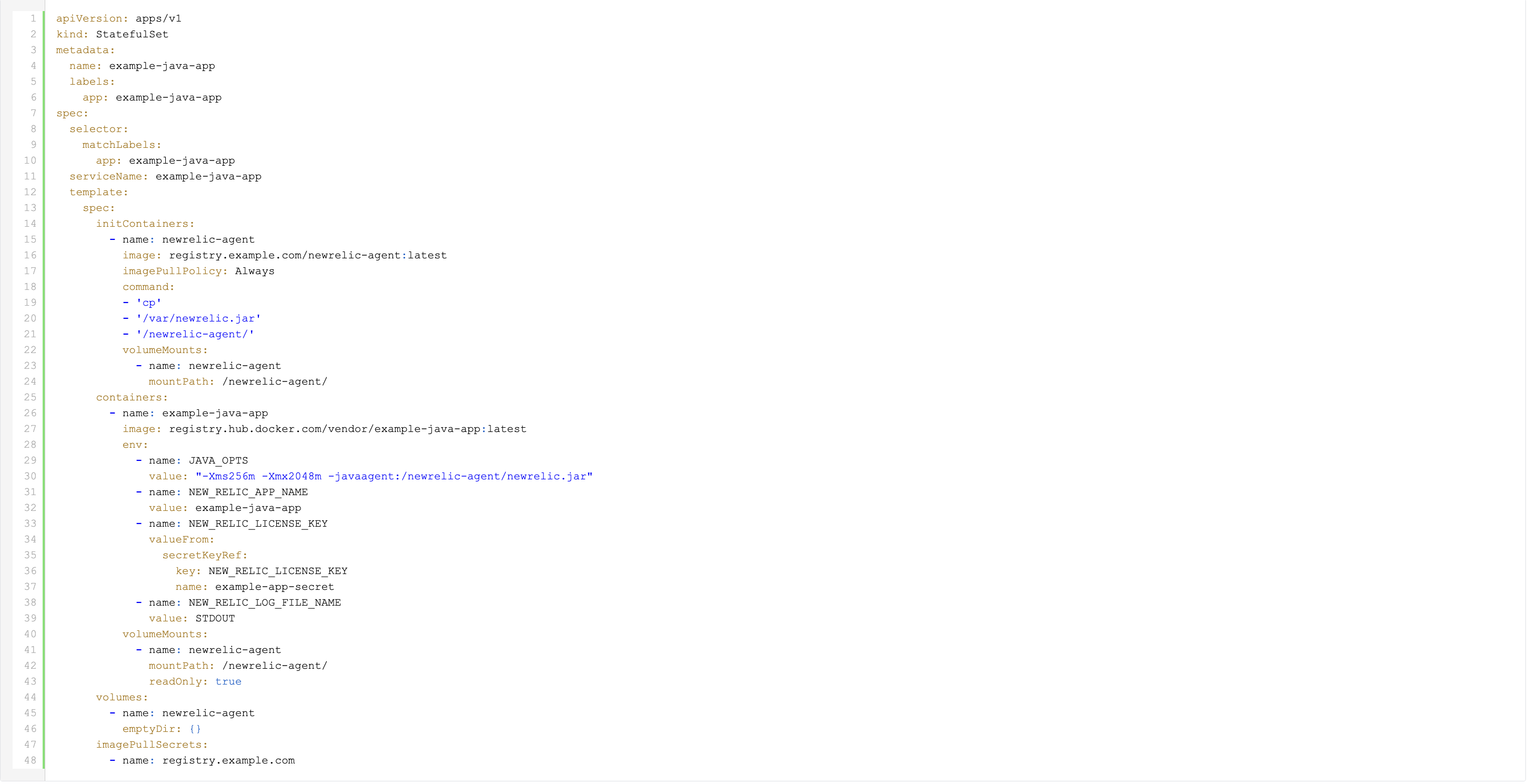

The resulting StatefulSet will look like this:
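The following manifest is a sketch of that result under a few assumptions: the custom image is published as `registry.example.com/newrelic-agent`, it contains a `cp` binary and stores the jar at `/newrelic/newrelic.jar`, and the license key lives in a Secret named `newrelic-license`. All of these names and paths are hypothetical and should be adapted to your own setup.

```yaml
# Illustrative sketch: image names, paths, and the Secret name are assumptions.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: my-app
spec:
  serviceName: my-app
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      initContainers:
        - name: newrelic-agent
          image: registry.example.com/newrelic-agent:latest
          imagePullPolicy: Always
          # Copy the jar from the image into the shared emptyDir volume.
          command: ["cp", "/newrelic/newrelic.jar", "/shared/newrelic/newrelic.jar"]
          volumeMounts:
            - name: newrelic
              mountPath: /shared/newrelic
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.0.0
          env:
            # -javaagent points at the jar on the shared volume.
            - name: JAVA_OPTS
              value: "-Xms512m -Xmx512m -javaagent:/opt/newrelic/newrelic.jar"
            - name: NEW_RELIC_APP_NAME
              value: my-app
            - name: NEW_RELIC_LICENSE_KEY
              valueFrom:
                secretKeyRef:
                  name: newrelic-license   # hypothetical Secret
                  key: license-key
            - name: NEW_RELIC_LOG_FILE_NAME
              value: STDOUT
          volumeMounts:
            - name: newrelic
              mountPath: /opt/newrelic
      volumes:
        # Lives as long as the pod; shared by the init and application containers.
        - name: newrelic
          emptyDir: {}
```

The two containers mount the same volume at different paths, so the path in `JAVA_OPTS` has to match the mount path of the application container, not that of the Init Container.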

Instead of including the New Relic agent jar in the application image, we use our custom newrelic-agent image as an Init Container. This container copies the newrelic.jar file to an emptyDir volume.

Since the Init Container runs before the regular application container and both share the same volume, the newrelic.jar file is accessible when the JVM starts.

Apart from the shared volume, we need a few New Relic agent specific environment variables:

- NEW_RELIC_APP_NAME: to set the application name

- NEW_RELIC_LICENSE_KEY: to provide the license key

- NEW_RELIC_LOG_FILE_NAME: to optionally set a non-default log location, in this case STDOUT

The New Relic Java agent documentation lists additional environment variables that you can use.

In addition to this basic configuration, you can use the New Relic server-side agent configuration.

As the JAVA_OPTS environment variable shows, we still need to instruct the JVM to load the New Relic agent jar with the -javaagent option. The path in that option must point to the file on the shared volume.

Also note that "imagePullPolicy" is set to "Always". This ensures that Kubernetes retrieves the latest image version each time the container starts, even when the tag itself has not changed.

Updating the New Relic agent

To ensure that the New Relic agent is always up-to-date, you can set up a Continuous Integration pipeline that automatically builds a new container image whenever a new agent version becomes available.

You can tag each image with its major, major.minor, and major.minor.patch version as well as "latest". This allows you to pin the agent version as loosely or as strictly as your application requires.

If the application's manifest file references a floating tag such as "latest" or a major version, updating can be as easy as restarting the application pods.

If you prefer to pin the New Relic agent to a specific version, you will need to update the newrelic-agent image tag in the StatefulSet and deploy this change to your Kubernetes cluster.
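As a sketch, pinning comes down to the tag on the Init Container image. The fragment below assumes the hypothetical image name `registry.example.com/newrelic-agent` and uses `8.10.0` purely as an illustrative version number.

```yaml
# Fragment of spec.template.spec in the StatefulSet (names are assumptions).
initContainers:
  - name: newrelic-agent
    # Exact pin: changes only when this manifest is edited and redeployed.
    image: registry.example.com/newrelic-agent:8.10.0
    # Looser alternatives that follow new releases when the pods restart:
    #   registry.example.com/newrelic-agent:8.10
    #   registry.example.com/newrelic-agent:8
    #   registry.example.com/newrelic-agent:latest
```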

In any case, you no longer need to build a custom image just to add the New Relic agent jar, which should allow for more frequent updates to the agent.

Do you want to discover more about Kubernetes?

What others have also read

In the complex world of modern software development, companies are faced with the challenge of seamlessly integrating diverse applications developed and managed by different teams. An invaluable asset in overcoming this challenge is the Service Mesh. In this blog article, we delve into Istio Service Mesh and explore why investing in a Service Mesh like Istio is a smart move." What is Service Mesh? A service mesh is a software layer responsible for all communication between applications, referred to as services in this context. It introduces new functionalities to manage the interaction between services, such as monitoring, logging, tracing, and traffic control. A service mesh operates independently of the code of each individual service, enabling it to operate across network boundaries and collaborate with various management systems. Thanks to a service mesh, developers can focus on building application features without worrying about the complexity of the underlying communication infrastructure. Istio Service Mesh in Practice Consider managing a large cluster that runs multiple applications developed and maintained by different teams, each with diverse dependencies like ElasticSearch or Kafka. Over time, this results in a complex ecosystem of applications and containers, overseen by various teams. The environment becomes so intricate that administrators find it increasingly difficult to maintain a clear overview. This leads to a series of pertinent questions: What is the architecture like? Which applications interact with each other? How is the traffic managed? Moreover, there are specific challenges that must be addressed for each individual application: Handling login processes Implementing robust security measures Managing network traffic directed towards the application ... A Service Mesh, such as Istio, offers a solution to these challenges. 
Istio acts as a proxy between the various applications (services) in the cluster, with each request passing through a component of Istio. How Does Istio Service Mesh Work? Istio introduces a sidecar proxy for each service in the microservices ecosystem. This sidecar proxy manages all incoming and outgoing traffic for the service. Additionally, Istio adds components that handle the incoming and outgoing traffic of the cluster. Istio's control plane enables you to define policies for traffic management, security, and monitoring, which are then applied to the added components. For a deeper understanding of Istio Service Mesh functionality, our blog article, "Installing Istio Service Mesh: A Comprehensive Step-by-Step Guide" , provides a detailed, step-by-step explanation of the installation and utilization of Istio. Why Istio Service Mesh? Traffic Management: Istio enables detailed traffic management, allowing developers to easily route, distribute, and control traffic between different versions of their services. Security: Istio provides a robust security layer with features such as traffic encryption using its own certificates, Role-Based Access Control (RBAC), and capabilities for implementing authentication and authorization policies. Observability: Through built-in instrumentation, Istio offers deep observability with tools for monitoring, logging, and distributed tracing. This allows IT teams to analyze the performance of services and quickly detect issues. Simplified Communication: Istio removes the complexity of service communication from application developers, allowing them to focus on building application features. Is Istio Suitable for Your Setup? While the benefits are clear, it is essential to consider whether the additional complexity of Istio aligns with your specific setup. Firstly, a sidecar container is required for each deployed service, potentially leading to undesired memory and CPU overhead. 
Additionally, your team may lack the specialized knowledge required for Istio. If you are considering the adoption of Istio Service Mesh, seek guidance from specialists with expertise. Feel free to ask our experts for assistance. More Information about Istio Istio Service Mesh is a technological game-changer for IT professionals aiming for advanced control, security, and observability in their microservices architecture. Istio simplifies and secures communication between services, allowing IT teams to focus on building reliable and scalable applications. Need quick answers to all your questions about Istio Service Mesh? Contact our experts

Read more

Liferay DXP has become a widely adopted portal platform for building and managing advanced digital experiences over recent years. Organizations use it for intranets, customer portals, self-service platforms, and more. While Liferay DXP is known for its user-friendliness, its default search functionality can be further optimized to meet modern user expectations. To address this, ACA developed an advanced solution that significantly enhances Liferay’s standard search capabilities. Learn all about it in this blog. Searching in Liferay: not always efficient Traditionally, organizational searches relied on individual keywords . For example, intranet users would search terms like "leave" or "reimbursement" to find the information they needed. This often resulted in an overload of results and documents , leaving users to sift through them manually to find relevant information—a time-consuming and inefficient process that hampers the user experience. The way users search had changed The rise of AI tools like ChatGPT has transformed how people search for information. This is also visible in online search engines like Google, where users increasingly phrase their queries as complete questions. For example: “How do I apply for leave?” or “What travel reimbursement am I entitled to?” To meet these evolving search needs, search functionality must not only be fast but also capable of understanding natural language. Unfortunately, Liferay’s standard search falls short in this area. ACA develops advanced AI-powered search for Liferay To accommodate today’s search behavior, ACA has created an advanced solution for Liferay DXP 7.4 installations: Liferay AI Search . Leveraging the GPT-4o language model , we’ve succeeded in significantly improving Liferay’s standard search capabilities. GPT-4o is a state-of-the-art language model trained on an extensive dataset of textual information. 
By integrating GPT-4o into our solution, we’ve customized search algorithms to handle more complex queries , including natural language questions. How does Liferay AI Search work? Closed dataset The AI model only accesses data from within the closed Liferay environment. This ensures that only relevant documents— such as those from the Library and Media Library—are accessible to the model. Administrators controls Administrators can decide which content is included in the GPT-4o dataset, allowing them to further optimize the accuracy and relevance of search results. Depending on the user’s profile, the answers and search results are tailored to the information they are authorized to access. Direct answers Thanks to GPT-4o integration, the search functionality provides not only traditional results but also direct answers to user queries. This eliminates the need for users to dig through search results to find the specific information they need. The comparison below illustrates the difference between search results from Liferay DXP’s standard search and the enhanced results from ACA’s Liferay AI Search. Want to see Liferay AI Search in action? Check out the demo below or via this link! Be nefits of Liferay AI Search Whether you use Liferay DXP for your customer platform or intranet, Liferay AI Search offers numerous advantages for your organization: Increased user satisfaction: Users can quickly find precise answers to their queries. Improved productivity: Less time is spent searching for information. Enhanced knowledge sharing: Important information is easier to locate and share. Conclusion With Liferay AI Search, ACA elevates Liferay DXP’s search functionality to meet modern user expectations. By integrating GPT-4o into Liferay DXP 7.4, this solution delivers not only traditional search results but also direct, relevant answers to complex, natural language queries. 
This leads to a faster, more user-friendly, and efficient search experience that significantly boosts both productivity and user satisfaction. Ready to optimize your Liferay platform search functionality Contact us today!

Read more

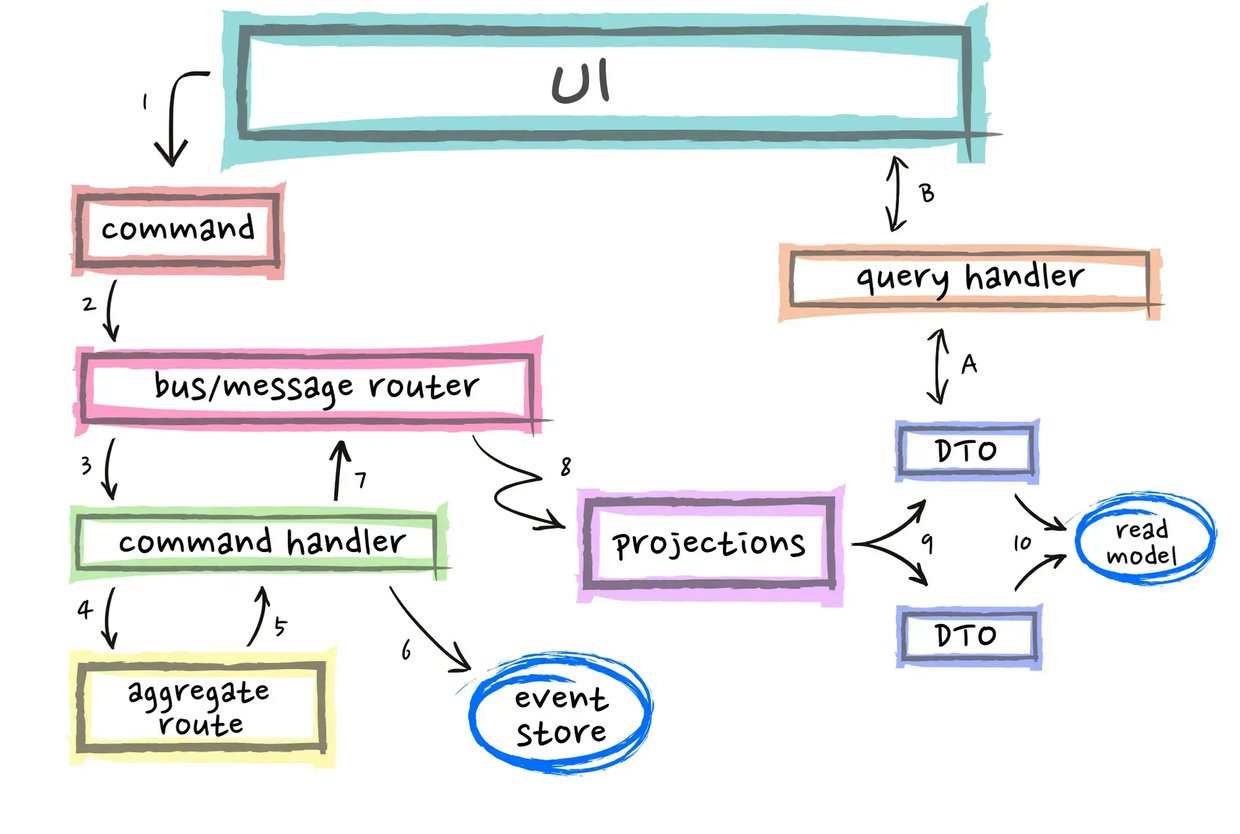

Staying current with the latest trends and best practices is crucial in the rapidly evolving world of software development. Innovative approaches like EventSourcing and CQRS can enable developers to build flexible, scalable, and secure systems. At Domain-Driven Design (DDD) Europe 2022 , Paolo Banfi delivered an enlightening talk on these two techniques. What is EventSourcing? EventSourcing is an innovative approach to data storage that prioritises the historical context of an object. Rather than just capturing the present state of an object, EventSourcing stores all the events that led to that state. Creating a well-designed event model is critical when implementing EventSourcing. The event model defines the events that will be stored and how they will be structured. Careful planning of the event model is crucial because it affects the ease of data analysis. Modifying the event model after implementation can be tough, so it's important to get it right from the beginning. What is CQRS CQRS (Command Query Responsibility Segregation) is a technique that separates read and write operations in a system to improve efficiency and understandability. In a traditional architecture, an application typically interacts with a database using a single interface. However, CQRS separates the read and write operations, each of which is handled by different components. Combining EventSourcing and CQRS One of the advantages of combining EventSourcing and CQRS is that it facilitates change tracking and data auditing. By keeping track of all the events that led to a particular state, it's easier to track changes over time. This can be particularly useful for applications that require auditing or regulation. Moreover, separating read and write operations in this way provides several benefits. Firstly, it optimises the system by reducing contention and improving scalability. Secondly, it simplifies the system by isolating the concerns of each side. 
Finally, it enhances the security of sensitive data by limiting access to the write side of the system. Another significant advantage of implementing CQRS is the elimination of the need to traverse the entire event stream to determine the current state. By separating read and write operations, the read side of the system can maintain dedicated models optimised for querying and retrieving specific data views. As a result, when querying the system for the latest state, there is no longer a requirement to traverse the entire event stream. Instead, the optimised read models can efficiently provide the necessary data, leading to improved performance and reduced latency. When to use EventSourcind and CQRS It's important to note that EventSourcing and CQRS may not be suitable for every project. Implementing EventSourcing and CQRS can require more work upfront compared to traditional approaches. Developers need to invest time in understanding and implementing these approaches effectively. However, for systems that demand high scalability, flexibility or security, EventSourcing and CQRS can provide an excellent solution. Deciding whether to use CQRS or EventSourcing for your application depends on various factors, such as the complexity of your domain model, the scalability requirements, and the need for a comprehensive audit trail of system events. Developers must evaluate the specific needs of their project before deciding whether to use these approaches. CQRS is particularly useful for applications with complex domain models that require different data views for different use cases. By separating the read and write operations into distinct models, you can optimise the read operations for performance and scalability, while still maintaining a single source of truth for the data. Event Sourcing is ideal when you need to maintain a complete and accurate record of all changes to your system over time. 
By capturing every event as it occurs and storing it in an append-only log, you can create an immutable audit trail that can be used for debugging, compliance, and other purposes. Conclusion The combination of EventSourcing and CQRS can provide developers with significant benefits, such as increased flexibility, scalability and security. They offer a fresh approach to software development that can help developers create applications that are more in line with the needs of modern organisations. If you're interested in learning more about EventSourcing and CQRS, there are plenty of excellent resources available online. Conferences and talks like DDD Europe are also excellent opportunities to stay up-to-date on the latest trends and best practices in software development. Make sure not to miss out on these opportunities if you want to stay ahead of the game! The next edition of Domain-Driven Design Europe will take place in Amsterdam from the 5th to the 9th of June 2023. Did you know that ACA Group is one of the proud sponsors of DDD Europe? 
{% module_block module "widget_bc90125a-7f60-4a63-bddb-c60cc6f4ee41" %}{% module_attribute "buttons" is_json="true" %}{% raw %}[{"appearance":{"link_color":"light","primary_color":"primary","secondary_color":"primary","tertiary_color":"light","tertiary_icon_accent_color":"dark","tertiary_text_color":"dark","variant":"primary"},"content":{"arrow":"right","icon":{"alt":null,"height":null,"loading":"disabled","size_type":null,"src":"","width":null},"tertiary_icon":{"alt":null,"height":null,"loading":"disabled","size_type":null,"src":"","width":null},"text":"More about ACA Group"},"target":{"link":{"no_follow":false,"open_in_new_tab":false,"rel":"","sponsored":false,"url":{"content_id":null,"href":"https://acagroup.be/en/aca-as-a-company/","href_with_scheme":"https://acagroup.be/en/aca-as-a-company/","type":"EXTERNAL"},"user_generated_content":false}},"type":"normal"}]{% endraw %}{% end_module_attribute %}{% module_attribute "child_css" is_json="true" %}{% raw %}{}{% endraw %}{% end_module_attribute %}{% module_attribute "css" is_json="true" %}{% raw %}{}{% endraw %}{% end_module_attribute %}{% module_attribute "definition_id" is_json="true" %}{% raw %}null{% endraw %}{% end_module_attribute %}{% module_attribute "field_types" is_json="true" %}{% raw %}{"buttons":"group","styles":"group"}{% endraw %}{% end_module_attribute %}{% module_attribute "isJsModule" is_json="true" %}{% raw %}true{% endraw %}{% end_module_attribute %}{% module_attribute "label" is_json="true" %}{% raw %}null{% endraw %}{% end_module_attribute %}{% module_attribute "module_id" is_json="true" %}{% raw %}201493994716{% endraw %}{% end_module_attribute %}{% module_attribute "path" is_json="true" %}{% raw %}"@projects/aca-group-project/aca-group-app/components/modules/ButtonGroup"{% endraw %}{% end_module_attribute %}{% module_attribute "schema_version" is_json="true" %}{% raw %}2{% endraw %}{% end_module_attribute %}{% module_attribute "smart_objects" is_json="true" %}{% raw %}null{% endraw %}{% 
end_module_attribute %}{% module_attribute "smart_type" is_json="true" %}{% raw %}"NOT_SMART"{% endraw %}{% end_module_attribute %}{% module_attribute "tag" is_json="true" %}{% raw %}"module"{% endraw %}{% end_module_attribute %}{% module_attribute "type" is_json="true" %}{% raw %}"module"{% endraw %}{% end_module_attribute %}{% module_attribute "wrap_field_tag" is_json="true" %}{% raw %}"div"{% endraw %}{% end_module_attribute %}{% end_module_block %}

Read moreWant to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!