At ACA, we live and breathe Kubernetes. We set up new projects with this popular container orchestration system by default, and we’re also migrating existing customers to Kubernetes. As a result, the number of Kubernetes clusters the ACA team manages is growing rapidly! We’ve had to change our setup multiple times to accommodate more customers, more clusters and more load while keeping maintenance low.

From an Amazon ECS to a Kubernetes setup

In 2016, we had a lot of projects that were running in Docker containers. At that point in time, our Docker containers were either running in Amazon ECS or on Amazon EC2 virtual machines running the Docker daemon. Unfortunately, this setup required a lot of maintenance. We needed a tool that would give us a reliable way to run these containers in production. We longed for an orchestrator that would provide us with high availability, automatic cleanup of old resources, automatic container scheduling and so much more.

→ Enter Kubernetes!

Kubernetes proved to be the perfect candidate for a container orchestration tool. It could reliably run containers in production and reduce the amount of maintenance required for our setup.

Creating a Kubernetes-minded approach

Agile as we are, we proposed the idea for a Kubernetes setup for one of our next projects. The customer saw the potential of our new approach and agreed to be part of the revolution. At the beginning of 2017, we created our very first Kubernetes cluster. At this stage, there were only two certainties: we wanted to run Kubernetes and it would run on AWS. Apart from that, there were still a lot of questions and challenges.

- How would we set up and manage our cluster?

- Could we run our existing Docker containers within the cluster?

- What type of access and information could we provide to the development teams?

In the end, we learned that the cluster setup was not the hardest part. Creating a new mindset within ACA Group to accept this new approach, and involving the development teams in our next-gen Kubernetes setup, proved to be harder. Apart from getting to know the product ourselves and getting other teams involved, we also had some other tasks that required our attention:

- we needed to dockerize every application,

- we needed to be able to set up applications in the Kubernetes cluster that were highly available and, if possible, also self-healing,

- and clustered applications needed to be able to share their state using the available methods within the selected container network interface.
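As a rough illustration of the high-availability and self-healing requirement above, the sketch below builds a Kubernetes Deployment manifest in Python and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The application name, image, port and `/healthz` path are hypothetical placeholders, not values from our actual setup. Running several replicas covers availability, while the liveness probe lets Kubernetes restart a container that stops responding.

```python
import json


def build_deployment(name, image, replicas=3, port=8080, health_path="/healthz"):
    """Return an apps/v1 Deployment manifest as a plain dict.

    Every default value here is a hypothetical placeholder for illustration.
    """
    labels = {"app": name}

    def probe():
        # HTTP health check; Kubernetes restarts the container when the
        # liveness probe keeps failing, and withholds traffic while the
        # readiness probe fails.
        return {
            "httpGet": {"path": health_path, "port": port},
            "initialDelaySeconds": 10,
            "periodSeconds": 10,
        }

    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            # More than one replica, so a single failing pod does not
            # take the application down.
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            "livenessProbe": probe(),
                            "readinessProbe": probe(),
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = build_deployment("demo-app", "registry.example.com/demo-app:1.0.0")
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it with `kubectl apply -f` would create the Deployment. In practice, the probe timings and replica count depend on the application.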

Getting used to this new way of doing things in combination with other tasks, like setting up good monitoring, having a centralized logging setup and deploying our applications in a consistent and maintainable way, proved to be quite challenging. Luckily, we were able to conquer these challenges and about half a year after we’d created our first Kubernetes cluster, our first production cluster went live (August 2017).

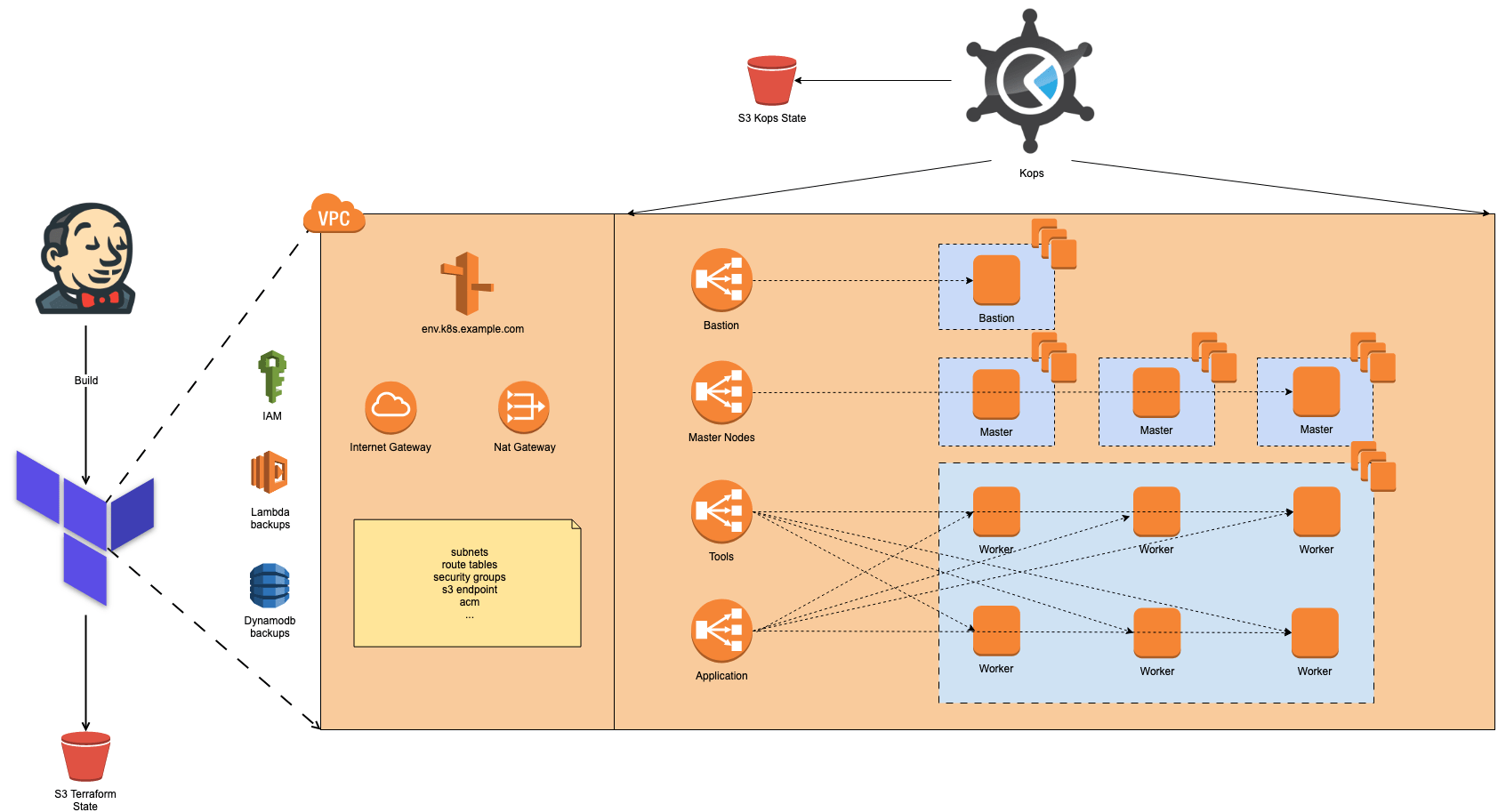

These were the core components of our toolset as of 2017:

- Terraform to deploy the AWS VPC, networking components and other dependencies for the Kubernetes cluster

- Kops for cluster creation and management

- An EFK stack for logging, deployed within the Kubernetes cluster

- Heapster, InfluxDB and Grafana in combination with Librato for monitoring within the cluster

- Opsgenie for alerting

Nice! … but we can do better: reducing costs, components and downtime

Once we had completed our first setup, it became easier to use the same topology and we continued implementing this setup for other customers. Through our infrastructure-as-code approach (Terraform) in combination with a Kubernetes cluster management tool (Kops), the effort to create new clusters was relatively low.

However, after a while, we started to notice some risks related to this setup. The amount of work required for the setup and the impact of updates or upgrades on our Kubernetes stack were too large. At the same time, the number of customers that wanted their very own Kubernetes cluster was growing. So, we needed to reduce the maintenance effort on the Kubernetes part of this setup to keep things manageable for ourselves.

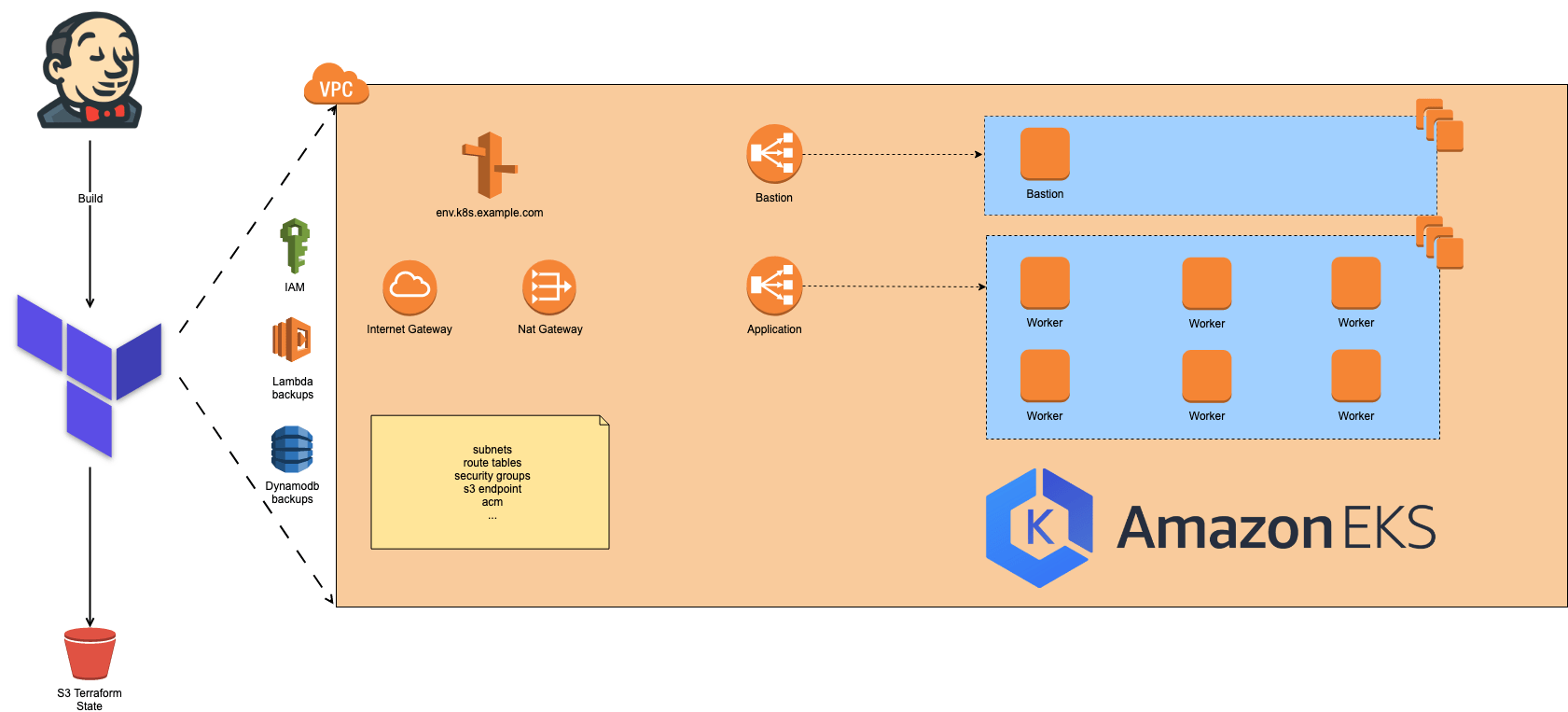

Migration to Amazon EKS and Datadog

At this point, the Kubernetes service from AWS (Amazon EKS) became generally available. We were able to move everything that was managed by Kops to our Terraform code, making things a lot less complex. As an extra benefit, the Kubernetes master nodes are now managed by EKS. This means we have fewer nodes to manage, and EKS also lets us upgrade clusters at the touch of a button.

Apart from reducing the workload on our Kubernetes management plane, we’ve also reduced the number of components within our cluster. In the previous setup, we were using an EFK (Elasticsearch, Fluentd and Kibana) stack for our logging infrastructure. For our monitoring, we were using a combination of InfluxDB, Grafana, Heapster and Librato. These tools gave us a lot of flexibility but required a lot of maintenance effort, since they all ran within the cluster. We’ve replaced them all with the Datadog Agent, reducing our maintenance workload drastically.

Upgrades in < 60 minutes

Furthermore, because of the migration to Amazon EKS and the reduction in the number of components running within the Kubernetes cluster, we were able to reduce the cost and availability impact of our cluster upgrades. With the current stack, using Datadog and Amazon EKS, we can upgrade a Kubernetes cluster within an hour. If we were to use the previous stack, it would take us about 10 hours on average.
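To put these numbers in perspective, here is a back-of-the-envelope calculation in Python. It takes "within an hour" as an upper bound of 1 hour and "about 10 hours on average" as 10 hours, and it assumes that clusters are upgraded one after another. The fleet size of 16 is the cluster count mentioned later in this article. Applying the old-stack figure to that whole fleet is a hypothetical comparison, not a measurement.

```python
OLD_STACK_HOURS = 10  # previous stack: "about 10 hours on average" per cluster
NEW_STACK_HOURS = 1   # EKS + Datadog: "within an hour", used here as an upper bound


def total_upgrade_hours(cluster_count, hours_per_cluster):
    """Total time for one upgrade round, assuming sequential upgrades."""
    return cluster_count * hours_per_cluster


if __name__ == "__main__":
    clusters = 16  # cluster count mentioned later in this article
    old_total = total_upgrade_hours(clusters, OLD_STACK_HOURS)
    new_total = total_upgrade_hours(clusters, NEW_STACK_HOURS)
    print(f"Previous stack: {old_total} hours per upgrade round")
    print(f"Current stack:  {new_total} hours per upgrade round")
    print(f"Difference:     {old_total - new_total} hours")
```

Under these assumptions, one upgrade round across 16 clusters drops from roughly 160 hours to at most 16 hours.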

So where are we now?

We currently have 16 Kubernetes clusters up and running, all running the latest available EKS version. Right now, we want to spread our love for Kubernetes wherever we can.

Multiple project teams within ACA Group are now using Kubernetes, so we are organizing workshops to help them get up to speed with the technology quickly. At the same time, we also try to keep up with the latest additions to this rapidly changing platform. That’s why we attended the KubeCon conference in Barcelona and shared our opinions at our KubeCon Afterglow event.

What’s next?

Even though we are very happy with our current Kubernetes setup, we believe there’s always room for improvement. During our KubeCon Afterglow event, we had some interesting discussions with other Kubernetes enthusiasts. These discussions helped us define our next steps and bring our Kubernetes setup to an even higher level. Some things we’d like to improve in the near future:

- add a service mesh to our Kubernetes stack,

- 100% automatic worker node upgrades without application downtime.

Of course, these are just a few focus points. We’ll implement many new features and improvements whenever they are released!
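One building block commonly used for worker node upgrades without application downtime is a PodDisruptionBudget. It limits how many pods of an application may be evicted at the same time while a node is drained. The sketch below builds such a manifest in Python and prints it as JSON. It illustrates the general Kubernetes mechanism under stated assumptions and does not describe our own setup. The `demo-app` label and the `minAvailable` value are hypothetical.

```python
import json


def build_pod_disruption_budget(app_label, min_available):
    """Return a policy/v1 PodDisruptionBudget manifest as a plain dict.

    app_label and min_available are hypothetical example inputs.
    """
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{app_label}-pdb"},
        "spec": {
            # Voluntary evictions (such as a node drain) are refused when
            # they would leave fewer than this many matching pods available.
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


if __name__ == "__main__":
    pdb = build_pod_disruption_budget("demo-app", min_available=2)
    print(json.dumps(pdb, indent=2))
```

With three replicas and `minAvailable` set to 2, a drain can evict only one pod of the application at a time, so the remaining pods keep serving traffic while the evicted pod is rescheduled on another node.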

What about you? Are you interested in your very own Kubernetes cluster? Which improvements do you plan on making to your stack or Kubernetes setup? Or do you have an unanswered Kubernetes question we might be able to help you with?

Contact us at cloud@aca-it.be and we will help you out!

Want to dive deeper into this topic?

Get in touch with our experts today. They are happy to help!
